Five prominent rings.

Blue, yellow, black, green, and red.

It’s one of the most recognizable symbols globally – a hallmark of good design. Yet, designing an Olympic logo isn’t a walk in the park.

Striking a delicate balance between the host city and the revelry of the games is a tough act – although not unachievable. The logo of the 1964 Tokyo Games, designed by Yusaku Kamekura and Masaru Katsumi, is a stellar example of timeless design.

Why did the logo work?

Among other reasons, it embodied two crucial commandments from Dieter Rams’s principles of design (we’ll come back to these): (a) Good design is long-lasting, and (b) Good design is as little design as possible.

Who doesn’t know the “land of the rising sun”?

As much as it captured the very essence of Tokyo, it also celebrated the spirit of sport.

Tokyo Olympic 1964

Across the world, the Allianz Arena in München, Germany, can accommodate 75,000 spectators. But, that’s not the only thing that’s impressive about it. Host to the opening ceremonies of the 2006 World Cup, it’s considered one of the best architectural structures. The stadium’s design emphasizes the procession-like walk of the fans toward the stadium.

Although the stadium is shaped like a crater, the stairs on the outside form a long, sloping approach, so from the air the crowd looks like a swarm of ants making its way home. Thousands of fans walk shoulder to shoulder, the adrenaline rush is high, and there’s solidarity in the air. The exterior of the stadium also changes color. Each aspect of the stadium is a masterclass in innovative design.

This is to say that all designs must serve a purpose.

But, before we get there, let’s trace the path from the roots of design to what UX design has become now. The objective has always been the same – to create a user-friendly experience.

It is the base of all design, whether in art, architecture, or digital spaces.

A Brief History of Design

According to an article published by CareerFoundry, we can travel back to 6000 B.C. to start our journey in design. With the concept of Feng Shui implemented in living spaces, the idea was to move objects around to make life harmonious and optimal. Choosing the right colors is also an intrinsic part of Feng Shui, as color affects a person’s mood.

Not too different from designing any user interface, is it?

By 500 B.C., alphabets had taken concrete shape – a milestone in design and a breakthrough in communication. Modern-day design, efficiency, and the purpose of design as we see it now perhaps started with Toyota. It put its people, the workers, at the forefront: it encouraged a healthy lifestyle, offered decent pay, and actively incorporated their suggestions and feedback.

They placed their employees at the heart – a critical step in defining user experience.

Had UX design finally seen the light of day? Perhaps it had.

Cut to the 70s, and to Apple.

Xerox’s PARC research center deserves a special mention here though. The mouse and the graphical interface were boons that the center bestowed on the world, and they set the path for the personal computing that we’ve come to accept as a necessity today.

Before the world relied on Siri or got used to the “Marimba” ringtone, Apple released the Macintosh, a personal computer with a graphical user interface, a built-in screen, and a mouse. Then, in 2001, teenagers found that the only way to stay “cool” was to play around with the iPod click wheel until they landed on The Calling’s “Wherever You Will Go.”

It was a time of great UI, even better UX, and incredible music.

In 1995, Donald Norman, a cognitive scientist at Apple, coined the term “User Experience.” At Apple, he worked as a User Experience Architect, the first there ever was.

In 2022, the term has evolved into so much more than just what looks good.

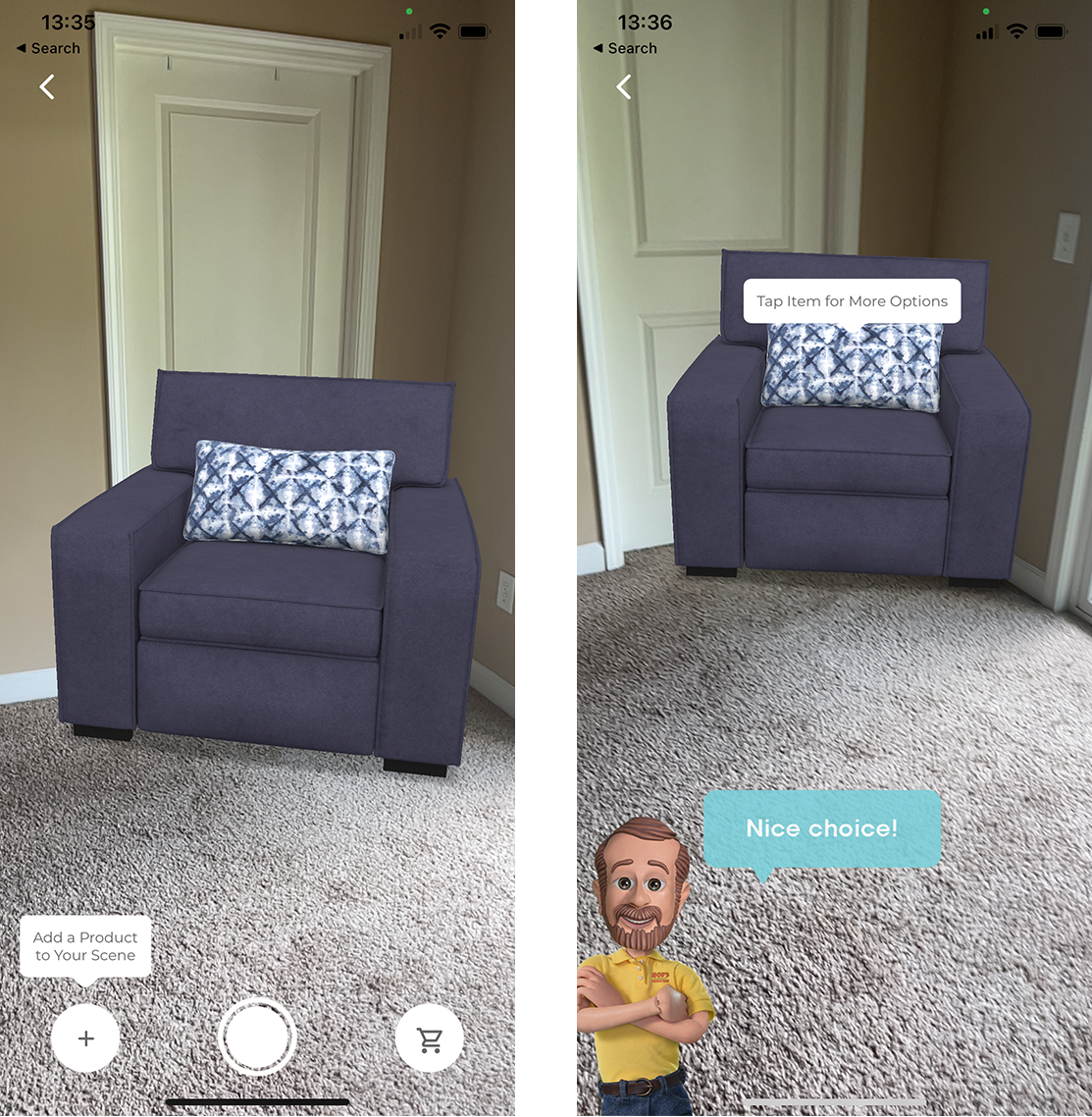

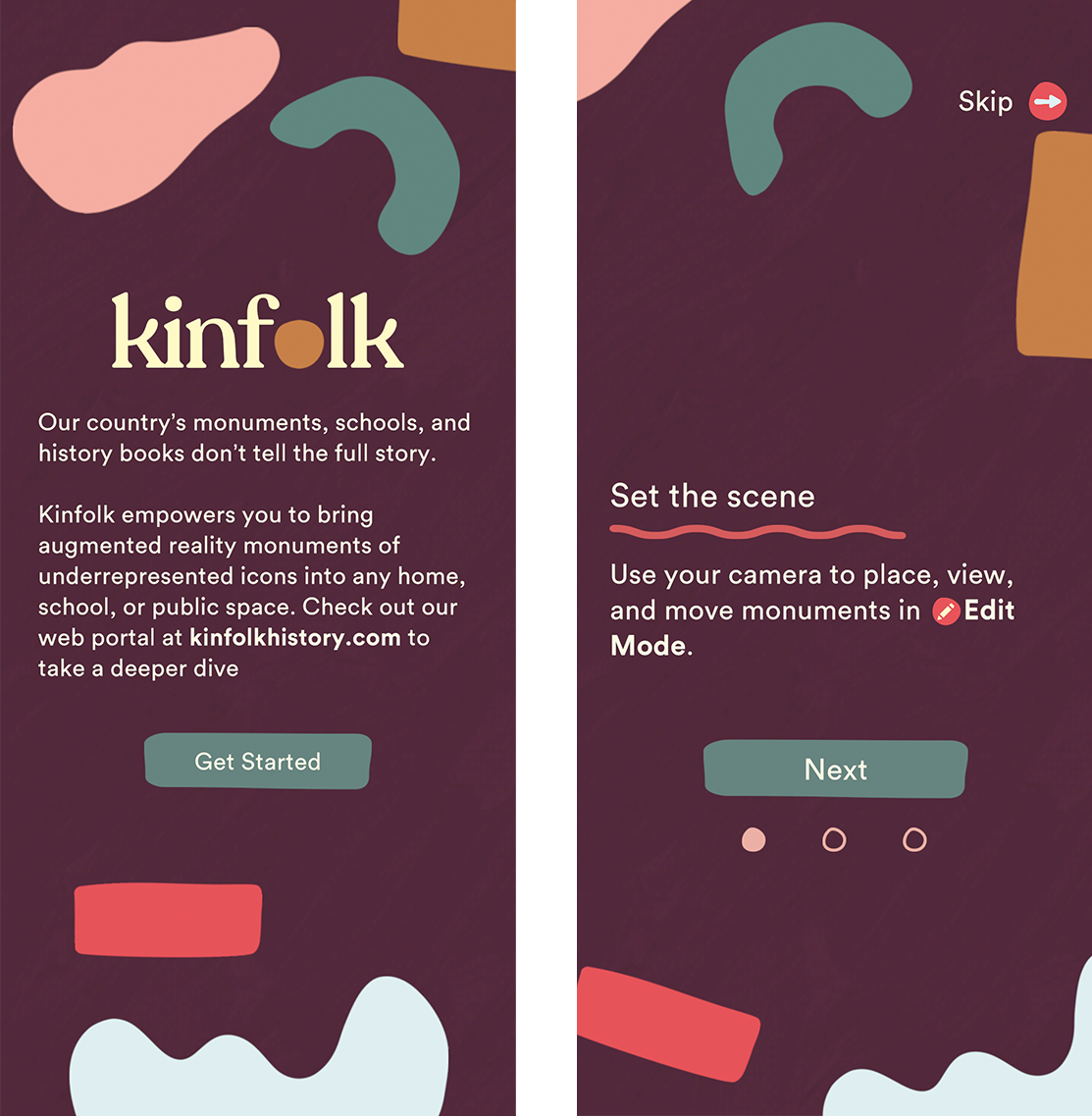

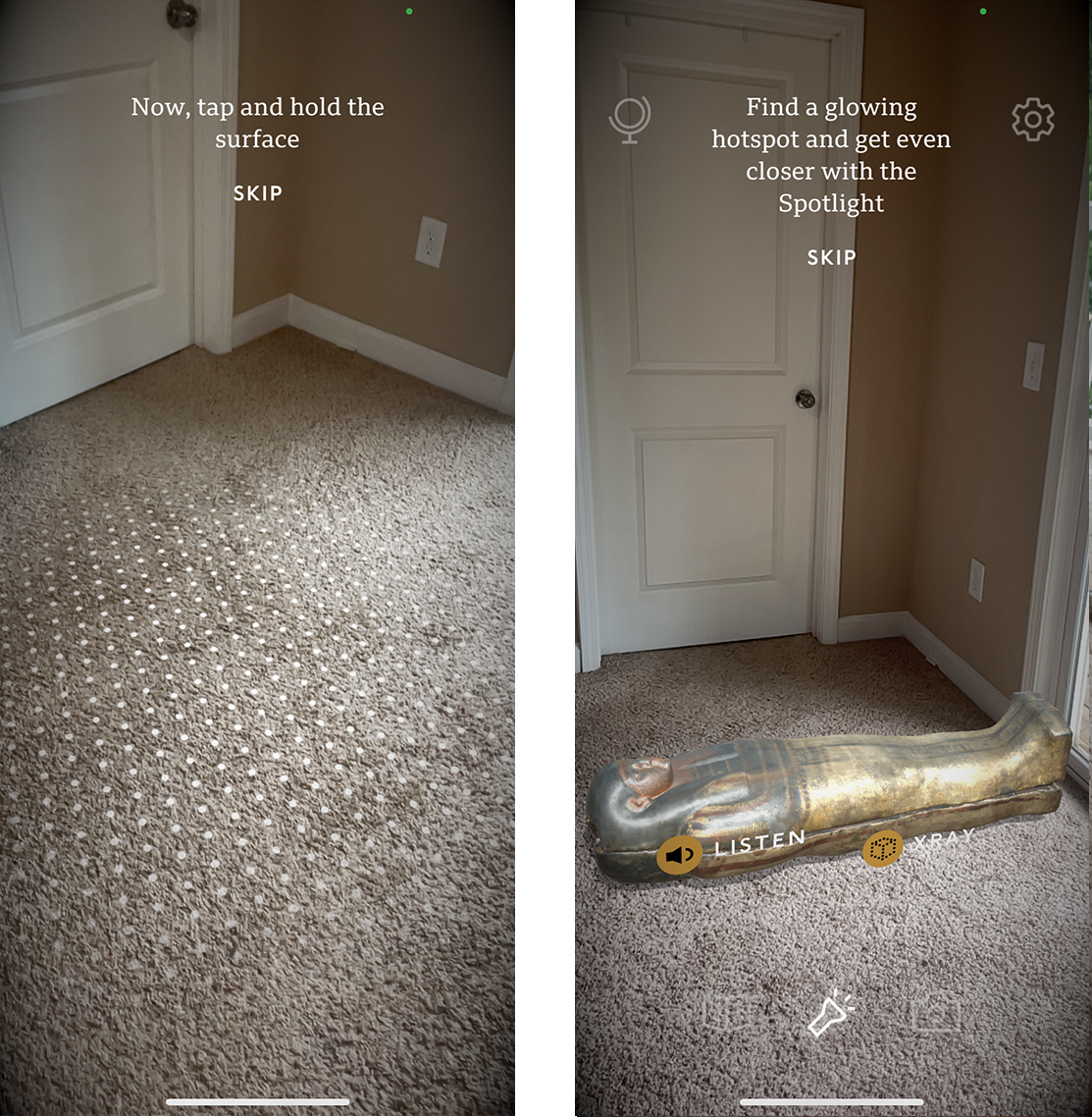

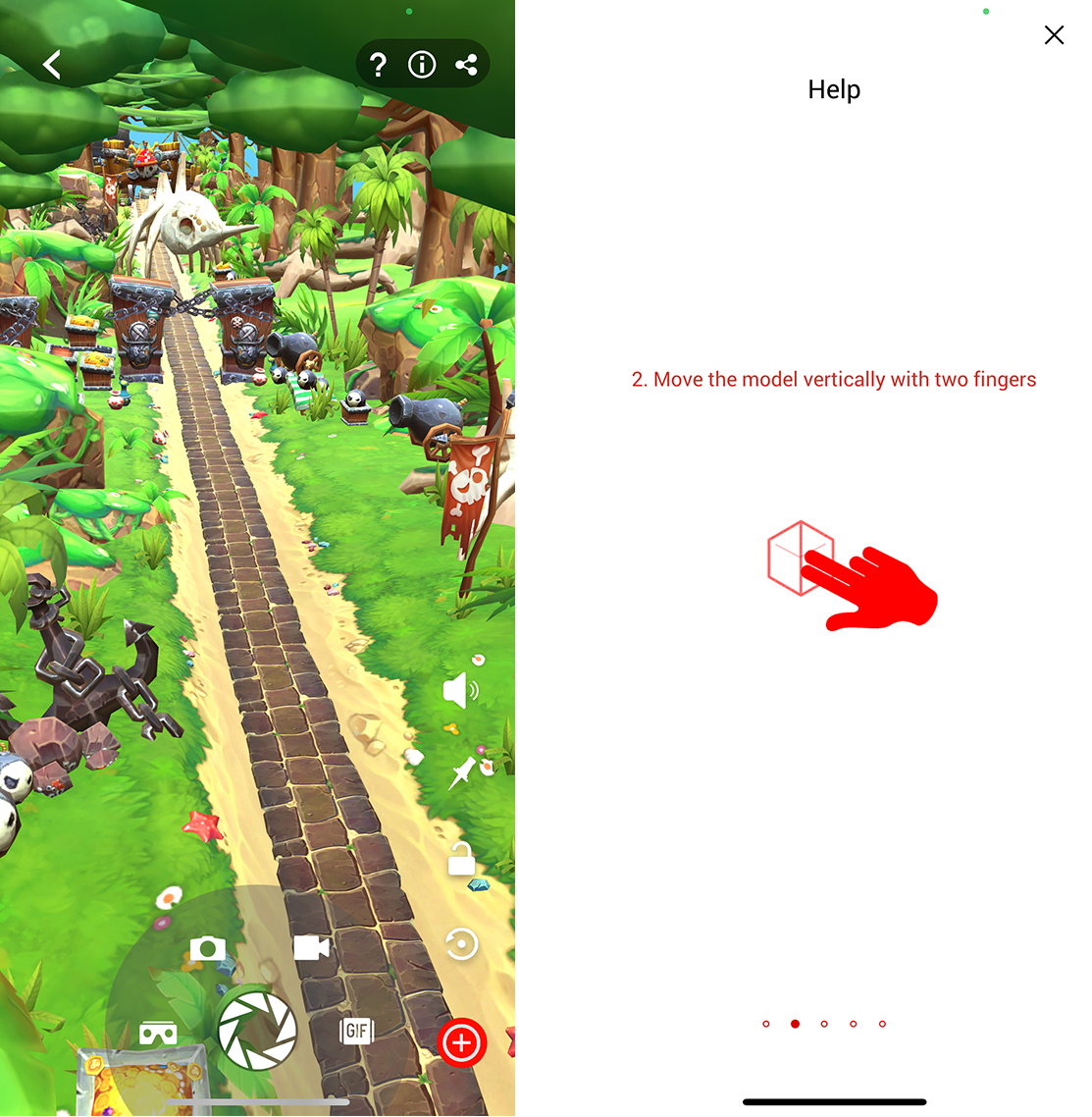

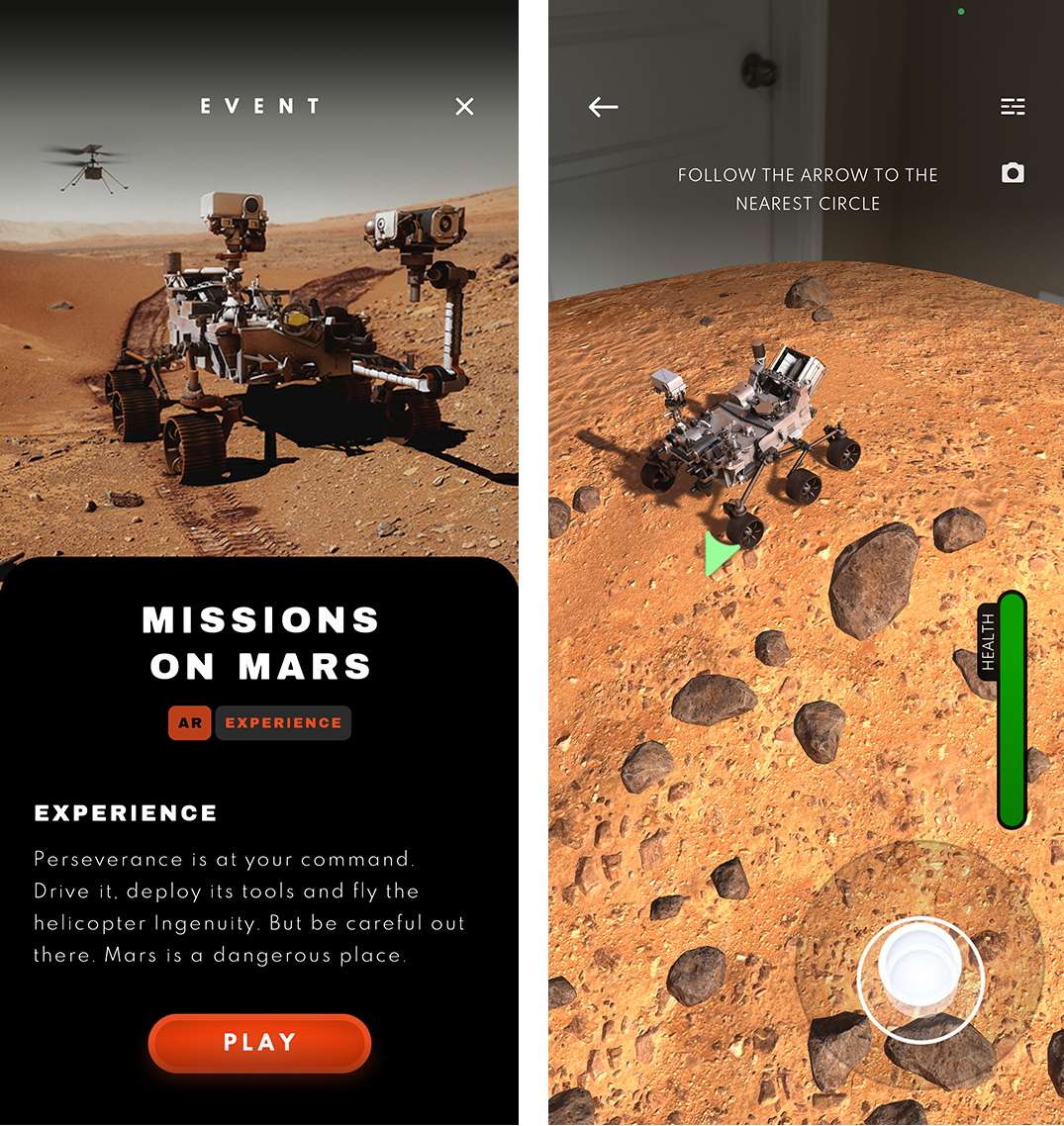

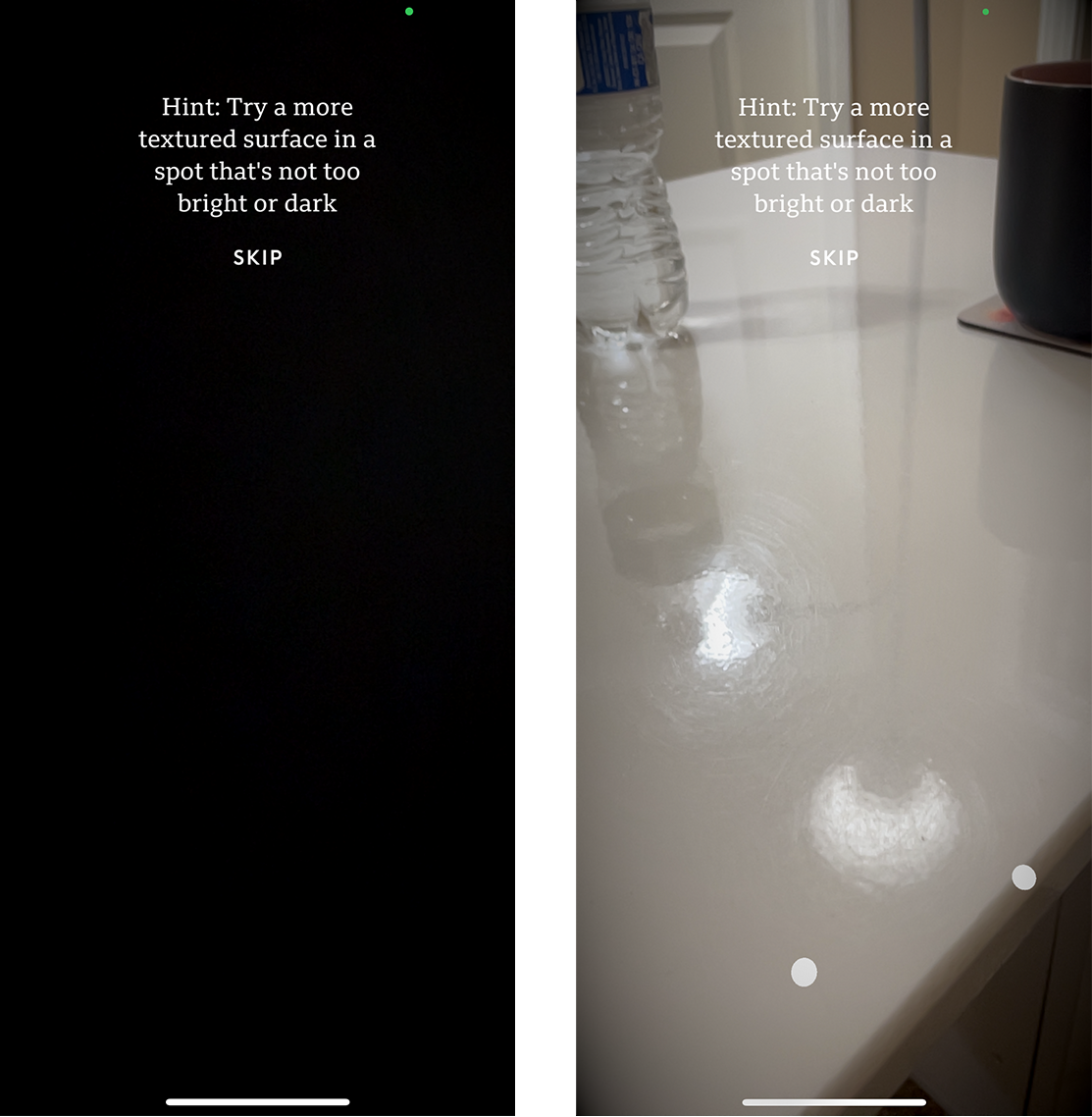

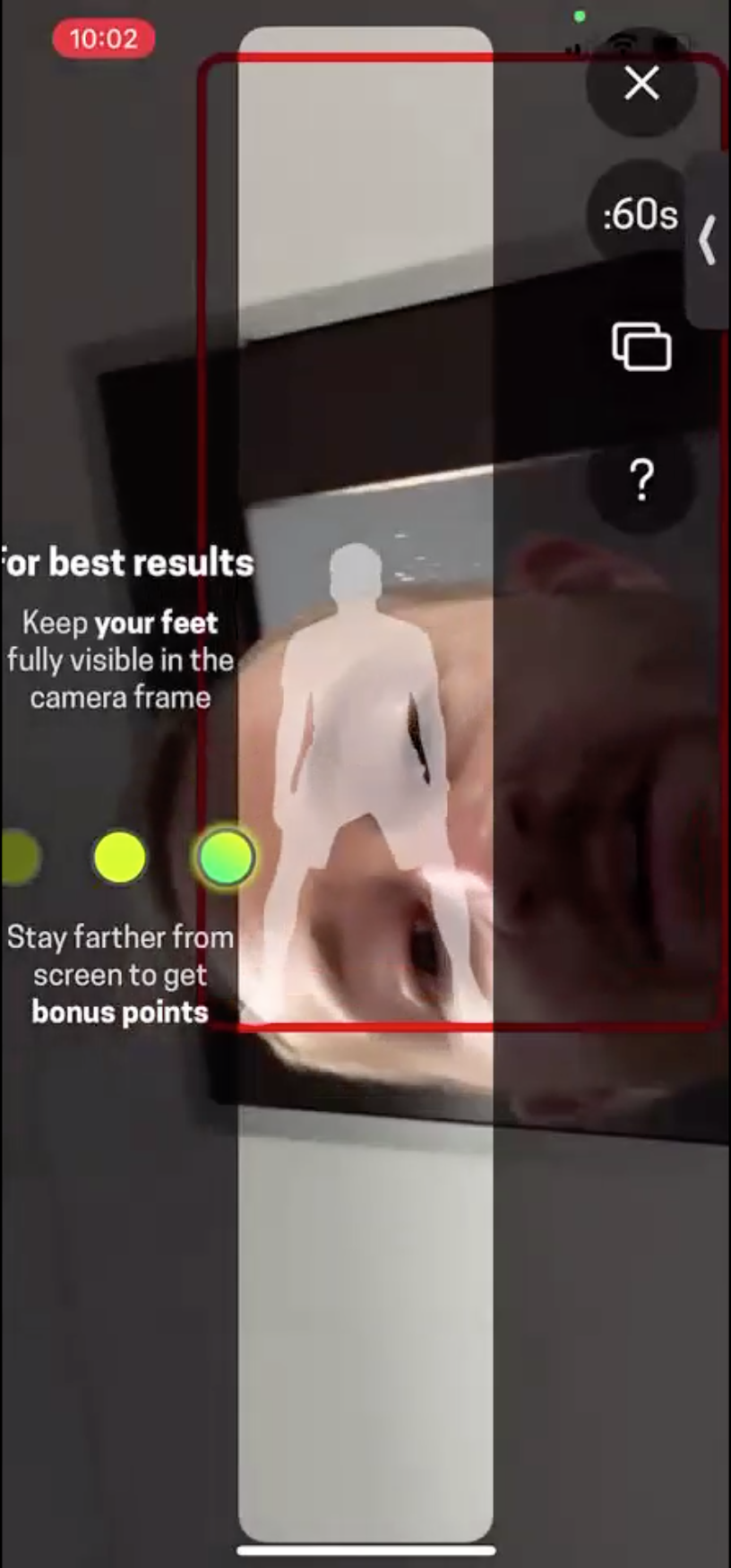

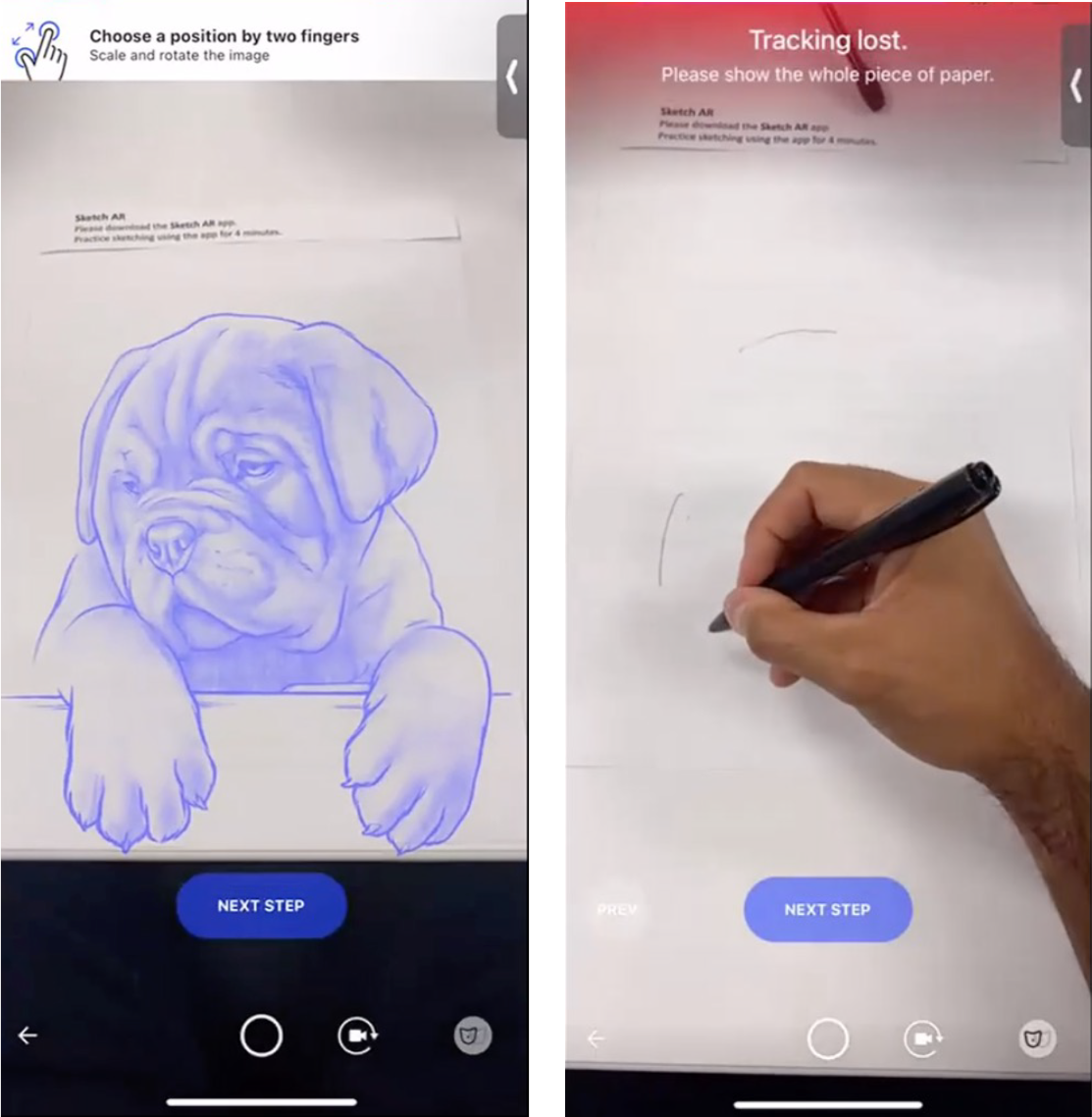

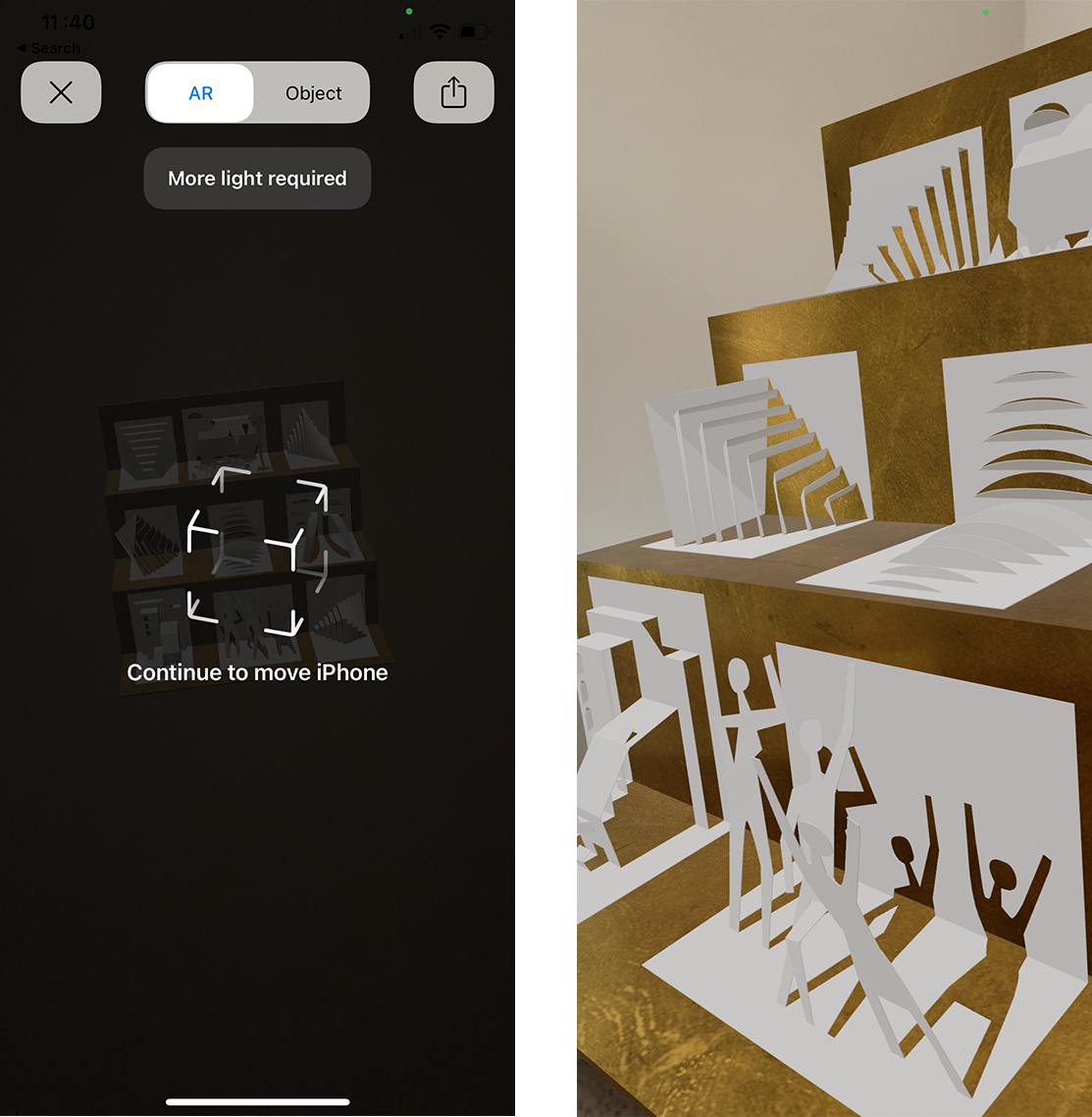

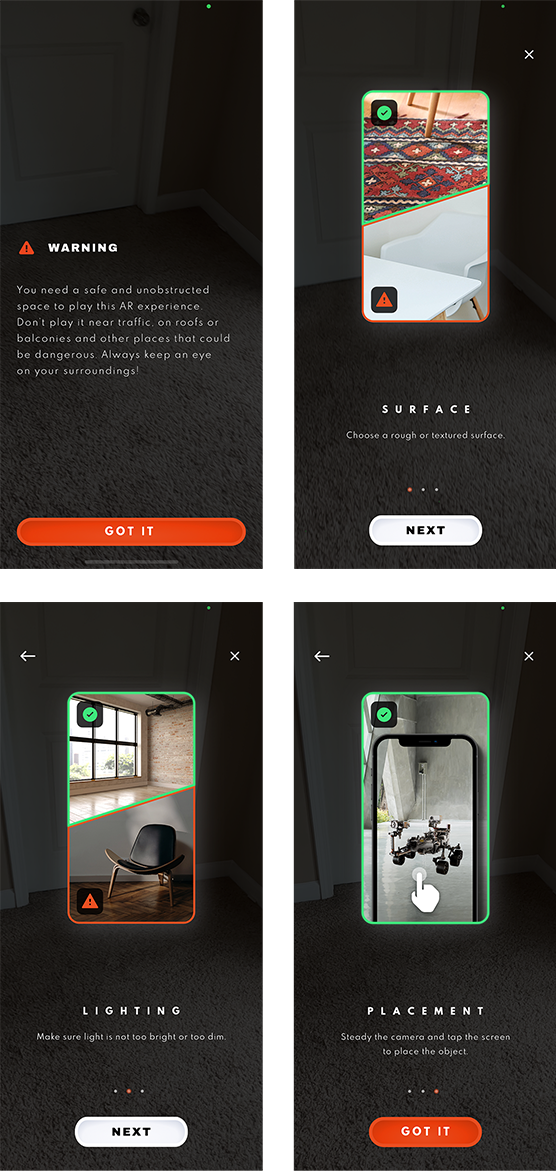

It’s a shape-shifting phenomenon that looks different every day. The focus now is on personalized and localized user experience with a heavy dose of augmented reality, artificial intelligence, data visualization, 3D elements, and responsive designs.

Now, let’s get to the meat.

Principles of UI/UX Design

The Pareto Principle

Ever heard of the 80/20 rule? Eat 80% of the pie and leave the rest for the spouse. No, unfortunately, not that one.

The principle states that roughly 80% of the effects of any process result from 20% of the causes. However, you might want to view it slightly differently in UX design: roughly 80% of your users rely on only 20% of your features.

Bottom line – simplify interfaces. Get rid of the frills. Remove buttons or features that don’t contribute to the outcome.
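One way to act on this is to look at usage data and find the small set of features that accounts for most interactions. The snippet below is a minimal sketch of that idea; the feature names, click counts, and the `core_features` helper are hypothetical and only there for illustration.

```python
def core_features(usage_counts, threshold=0.8):
    """Return the most-used features whose combined usage first
    reaches `threshold` (a fraction of all recorded usage)."""
    total = sum(usage_counts.values())
    if total == 0:
        return []
    selected = []
    running = 0
    # Walk through features from most used to least used.
    ranked = sorted(usage_counts.items(), key=lambda kv: kv[1], reverse=True)
    for name, count in ranked:
        selected.append(name)
        running += count
        if running / total >= threshold:
            break
    return selected


# Hypothetical click counts per feature (illustrative data only).
usage = {
    "search": 500,
    "checkout": 300,
    "wishlist": 80,
    "share": 50,
    "compare": 40,
    "gift_wrap": 20,
    "print_page": 10,
}
print(core_features(usage))
```

Features that never make it into the returned list are the first candidates to simplify, tuck away, or remove, although usage counts alone can’t tell you whether a rarely used feature is still critical.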

The Gestalt Principle

The Gestalt principles, which come from Gestalt psychology, are “laws of human perception that describe how humans group similar elements, recognize patterns and simplify complex images when we perceive objects.”

For designers, it’s crucial to understand and implement this principle to organize the layout of any interface and make it aesthetically pleasing.

Six common laws fall under the Gestalt Principle:

- Closure

The human mind is wired to complete spaces in perceived incomplete shapes. Hence, we automatically fill in gaps between elements, so that the mind can accept them as one singular or whole entity.

Designers rely heavily on this law to create logos with negative spaces or to make negative spaces look less barren.

- Common Region

The human mind also groups elements that fall in the same closed region. To put this law to use, designers deliberately place related objects in the same closed area to set them apart from objects in other closed areas.

An excellent way to create a common region is by placing elements inside a border.

- Continuation

Whether with lines, curves, or a sequence of shapes, our eyes tend to follow a natural path. A break in these elements might be jarring, which is a key lesson for a designer: it may immediately drive a user away. Continuation also affects positive and negative spaces in design.

The objective is to create a flow that is easy to navigate.

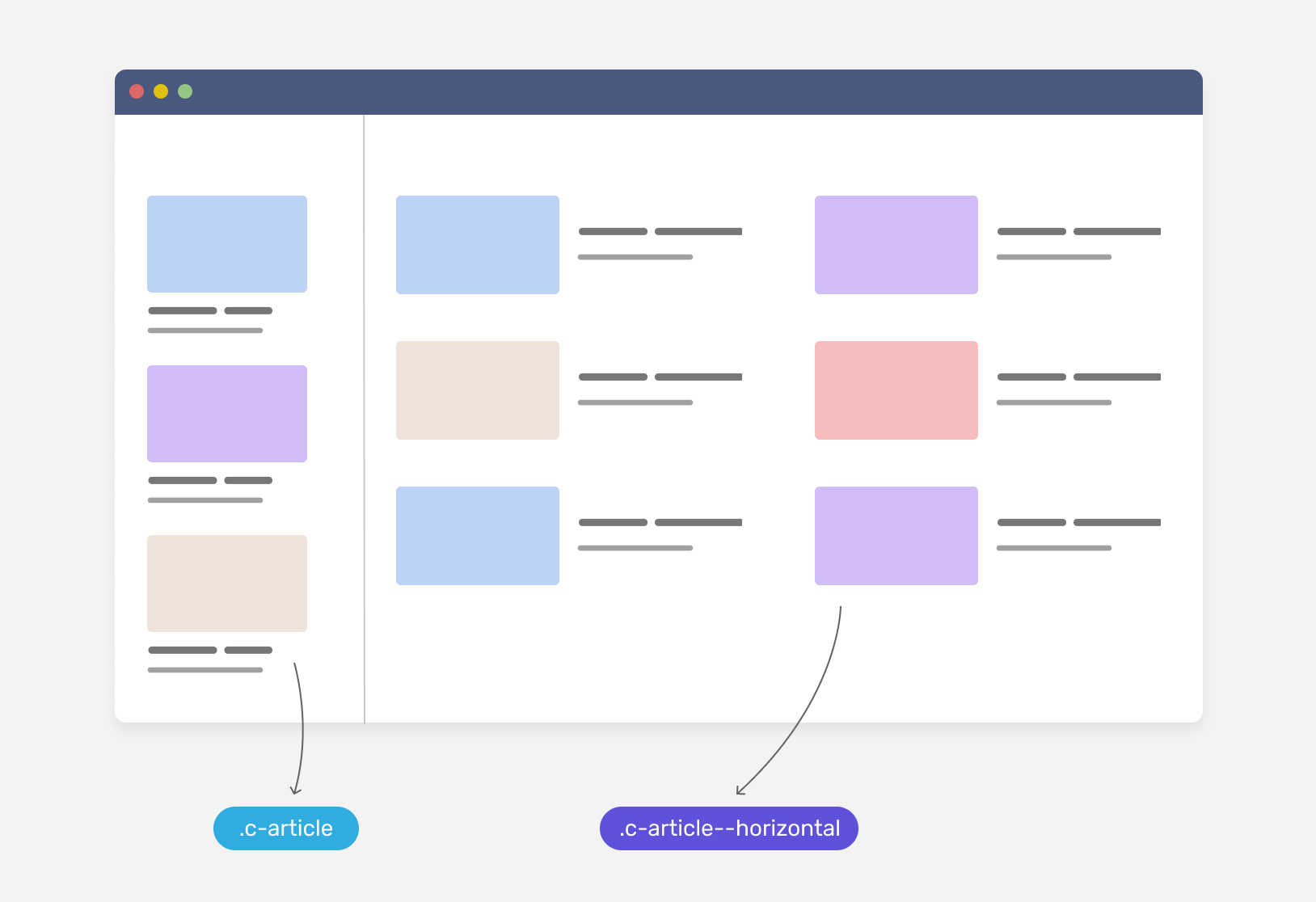

When designing an e-commerce website, ensure that navigation follows a linear path. In the example given below, one can quickly categorize and differentiate between primary and secondary navigation. Home, profile, shop, contact, and help promptly stand out as one group, while men, women, and kids form another.

- Figure/Ground (Multi-stability)

What do you see first? A vase or two faces?

What’s happening here is called the principle of multi-stability. The image can be interpreted in two ways, but our mind can hold only one interpretation at a time. Because this is outside our conscious control, we can’t predict who will see the vase first and who will see the two faces.

When posed with a dilemma like this one, our mind is quick to fight uncertainty and look for solid and stable items. But, in most cases, unless an image is truly abstract, the foreground catches our eye first.

In UX design, this principle is used in navigation panels, modals, and dialogs.

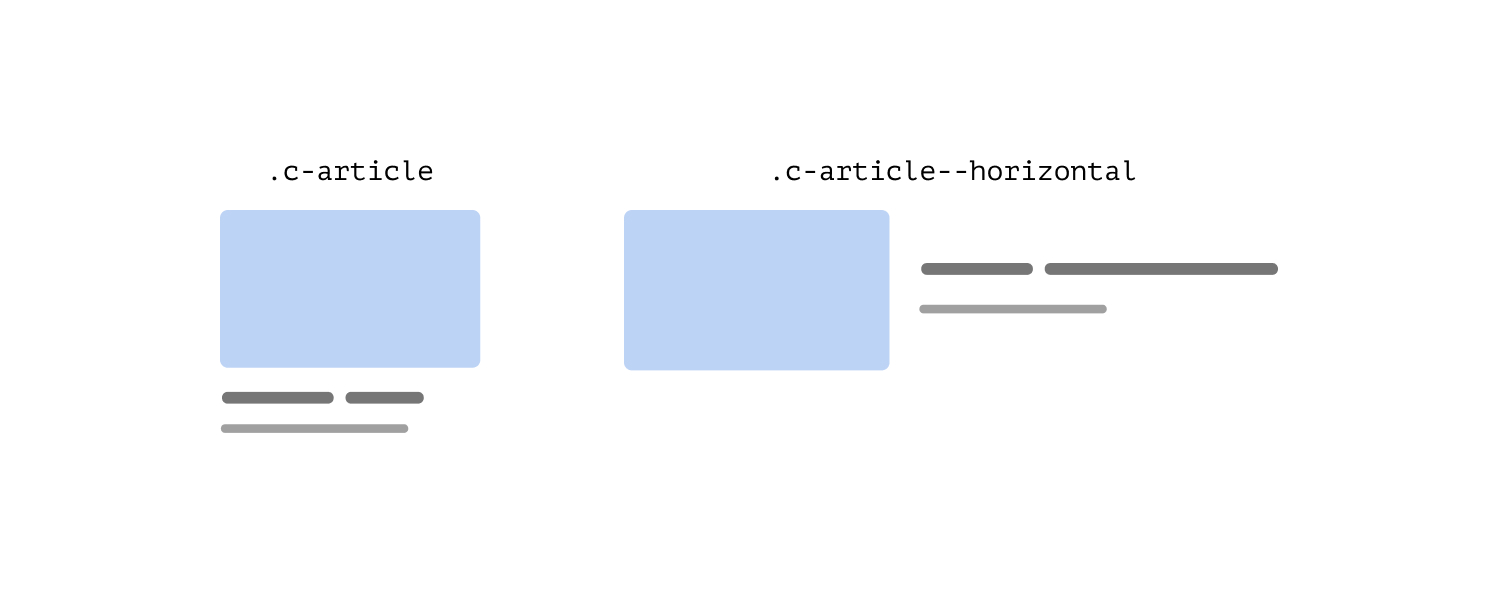

- Proximity

Proximity is the spatial relationship between elements inside a perceived or actual frame. To follow this rule, place related things close to each other and unrelated things further apart.

You can also apply the same rule in the context of text. Sentences should be grouped in paragraphs and separated below and above by whitespace. Whitespaces around headings demarcate the beginning of a new topic or paragraph.

- Similarity (Invariance)

The invariance principle states that our brain looks for similarities and differences in everything. This is why it’s easy to make something the center of attention in a crowd of similar objects. Imagine a wall full of black squares in different sizes and one solitary red square. Without realizing it, you have created two groups in your head.

The fields and the button are the same size in the image below. However, the button is a different color, which immediately prompts us to perform a specific function. We also intuitively know that the blue text in the description is a link.

Understanding design principles provides designers with a good head start on their journey. But, there are 10 commandments of design by Dieter Rams that a designer must follow:

Dieter Rams’s 10 Commandments for Good Design

Good design is innovative

Developments in technology go hand-in-hand with those of UI and UX design – they supplement each other. As a result, there is always room for innovative design with new offerings in technology, especially when designing for the masses. However, innovative design doesn’t have to rely on technology alone. It can also benefit from shifting trends in user behavior.

Good design makes a product useful

The sole purpose of designing is to serve a practical purpose. When a design meets functional, psychological, and aesthetic criteria, it emphasizes the usefulness of a product.

Good design is aesthetic

Human beings are visual creatures and have relied on visual cues since the beginning of time to find food, shelter, mates, and the like. So, when designing a product, the aesthetic quality is integral to its usefulness and success.

Good design makes a product understandable

If you must explain a product and what it does, consider the battle lost. Good design makes the product’s structure clear because that structure is carefully laid out in the product itself. It should be self-explanatory and intuitive.

Good design is unobtrusive

In UX design, products will rarely take up ample physical space. Yet, good UX design seamlessly finds itself incorporated into our daily life. The design should be neutral and feel personalized.

Good design is honest

If your design attempts to manipulate the consumer – you should go back to the drawing board and start afresh. Good UI design has nothing to hide; it’s transparent.

Good design is long-lasting

Good design doesn’t attempt to be fashionable; it stays classic and never appears antiquated. Instead, it stands out as fresh even in a constantly changing world.

Good design is thorough down to the last detail

When designing a product, designers must put themselves in the user’s shoes. Starting a project by forcing a solution is not the way to go. Instead, focus on all the pain points and leave nothing out. Practice care and accuracy at every step of the design process.

Good design is environmentally-friendly

What can you do as a UI designer to make your designs more earth-friendly? For starters, you can choose an eco-friendly web host, power your website with a green energy source, and create simple designs. All of which will help reduce the carbon emissions of your website.

Good design is as little design as possible

Always strip down to the basics and keep what is crucial. The more the clutter, the more confused the user will be. Focus on reducing elements and buttons as it will help you concentrate on essential aspects and things that matter.

Getting the hang of it? There’s just one last thing we’ll cover now. Pay attention – it’s important.

UX Laws Every Designer Should Know About

Hick’s law

Hick’s law states that the more choices users have, and the more complex those choices are, the longer they take to reach a decision. To incorporate Hick’s law into your design, break complex tasks into smaller steps and minimize choice when response times are critical.

Sometimes, the user needs a little help. Highlight options as recommendations to help ease their user journey. However, be careful of what you’re subtracting or removing – you may miss out on crucial elements.
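Hick’s law is commonly written as T = a + b · log2(n + 1), where n is the number of equally likely choices. The sketch below uses that common formulation to show how decision time grows as options are added; the constants `a` and `b` are placeholder assumptions, since real values have to be measured for a given interface and audience.

```python
import math


def hick_decision_time(n_choices, a=0.0, b=0.2):
    """Estimate decision time in seconds with Hick's law:
    T = a + b * log2(n + 1).

    a and b are assumed placeholder constants, not measured values.
    """
    if n_choices < 0:
        raise ValueError("n_choices must be non-negative")
    return a + b * math.log2(n_choices + 1)


# Compare menus of different sizes.
for n in (1, 3, 7, 15):
    print(f"{n:>2} choices -> {hick_decision_time(n):.2f} s")
```

Because the growth is logarithmic, each extra option costs less than the one before it, but the time never stops climbing, which is why trimming or highlighting options still pays off.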

Fitts’s law

Fitts’s law simplifies the process for users even more. Think of it this way – the user wants to hit the bullseye in one shot, but the only difference is that the center of the target shouldn’t be a small red dot. It should be as large as possible.

Touch targets should be large enough so that users can accurately select them. Ensure that there is enough space between the touch target and other buttons so that movements are quick, deliberate, and precise.
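Fitts’s law is often expressed in its Shannon formulation as MT = a + b · log2(D / W + 1), where D is the distance to the target and W is its width along the direction of movement. The following is a minimal sketch of that formula; the constants `a` and `b` and the pixel values are illustrative assumptions rather than measured data.

```python
import math


def fitts_movement_time(distance, width, a=0.1, b=0.15):
    """Estimate the time in seconds to reach a target with Fitts's law
    (Shannon formulation): MT = a + b * log2(distance / width + 1).

    a and b are assumed placeholder constants, not measured values.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return a + b * math.log2(distance / width + 1)


# Same distance to travel, two hypothetical button widths in pixels.
small_button = fitts_movement_time(distance=300, width=24)
large_button = fitts_movement_time(distance=300, width=88)
print(f"small: {small_button:.2f} s, large: {large_button:.2f} s")
```

With the distance held constant, the wider target always produces the shorter estimate, which is the quantitative version of “make the bullseye bigger.”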

Miller’s law

Miller’s law states that, on average, a person can hold only about seven items in their working memory. Suppose you are designing a navigation page – bombard users with more than seven elements, and chances are they will not recall the location they arrived from.

This is often why services or products with several options are grouped to reduce the memory load.
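Grouping can be as simple as splitting a long flat list into a few short chunks. Here is a minimal sketch, assuming a hypothetical list of navigation labels and a chunk size of four; in a real product the groups would follow meaning (for example, by category) rather than list position.

```python
def chunk(items, size):
    """Split a list into consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical navigation labels (illustrative only).
nav_items = [
    "Home", "Shop", "Men", "Women", "Kids",
    "Sale", "Profile", "Orders", "Contact", "Help",
]

for group in chunk(nav_items, 4):
    print(group)
```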

Jakob’s Law

Jakob’s law states that users will often project the expectations they have formed on other sites onto yours. If they prefer a website for any reason, they will enjoy spending time on it. When they hop onto your site, they will expect the same aesthetic and the same sense of satisfaction that their preferred site offers.

While it may seem counter-intuitive, it may be a good idea to stay close to benchmarks already set and not try to create something overly unique.

Even when armed with all the knowledge in the world, mistakes are bound to happen. When designing for UX, designers often make the following mistakes. With everything we’ve learned, let’s figure out how we can avoid them.

UX Design Mistakes to Avoid

Inconsistencies

Inconsistency is a major turn-off for all, whether in life or UX design. For instance, when using straight lines as dividers for icons, elements, or segments, ensure that the lines are all equally thick or equally thin. If you’ve settled on a font, use fonts of the same family throughout the product. When every element in your design follows a consistent pattern, a single inconsistency breaks that pattern, and the anomaly stands out in a jarring way.

Blurred lines between primary and secondary buttons

Not demarcating primary and secondary buttons is a good way to annoy a user – the biggest sin a designer can commit. Primary and secondary buttons exist as they serve a specific purpose. Highlight primary buttons in a strong color and add more visual weight to them.

Lack of text hierarchy

A lack of text hierarchy can also instantly break your design. Think of study notes you made in school while cramming for an exam. You capitalized the main topic, wrote over it to make it appear bold, and even drowned it in some fluorescent yellow highlighter. The important bits followed as sub-headings and then bullet points. A clear ranking of information, from the most critical to the least, stood out most effectively. Apply similar practices for UX design and ensure that you let your text breathe with adequate spacing.

Not focusing on icons

Bad iconography can turn a potentially successful design or product into one that will instantly be forgotten. Why are icons important in UX design? Users recognize them instantly, and they help users navigate better. Most importantly – icons save space. The purpose of an icon is to communicate a concept quickly. Hence, it’s best to stick to figures and images that resonate with the action they prompt. Line style, hand-drawn, and multi-color icons are all the rage in 2022.

Low-quality images

We’re in 2022 – visuals are everything. There is no excuse for you to settle for low-quality images. Your user most definitely won’t. While you’re at it, look for images that speak about your service or product and find high-quality images only. Staged and fake photos may land you in a hot mess, so look for realistic and creative photos.

Now, to stay abreast – let’s equip you with some UX trends for 2022.

2022 UX Trends to Keep an Eye On

- Simplicity wins. If there’s one thing you must learn from Dieter Rams – it’s simplicity. Whether we’re in 2022 or 3022 – one thing will remain constant. Simplicity; it will never run out of fashion. So, when designing a product, your sole aim shouldn’t be to chase everything that’s transforming around you. Start with the basics and come back to the basics.

- Delicate serifs will continue to reign, but now is an excellent time to experiment with typography. Go bold and go big. Keep in mind that it may appear boxy. Likewise, 70s-inspired disco fonts are making quite the comeback.

- Characterized by blurred backgrounds, Glassmorphism creates a frosted glass effect. To create this effect, place light or dark elements on colorful multi-layer backgrounds. As you add another layer of a blurry effect to the background of the elements, it appears as though it’s morphed into frosted glass.

- Were you aware that 22% of internet users buy groceries using voice assistants? If you weren’t, now is a good time to incorporate voice user interfaces in your design or product.

- Diversity and inclusivity shouldn’t just be buzzwords anymore. When designing a product, you must also think about how accessible it is for every audience member – including those with limited abilities. An all-inclusive design is the need of the hour.

Now that you’ve got this crash course under your belt, will you become the best designer the world has ever seen? Perhaps not just yet – but you’ll become one who is better than yesterday. While following the laws and principles is crucial in understanding user experience and how best to design for the user, design thinking is the first step in designing UI.

More from AR Insider…
