How great research and poor social skills can give your work a bad case of “Schrödinger’s Research”

If you can’t draw a line from specific research you conducted to a change in the product, you may be experiencing a case of “Schrödinger’s Research.”

Don’t sweat! Schrödinger’s Research is a common phenomenon in the user experience industry. It occurs when bright researchers conduct brilliant UX studies, but the insights never make it out of their brains and into the brains of their stakeholders.

It’s as if the research might never have taken place at all.

Schrödinger’s Cat is a famous thought experiment about superposition and the role of observation in quantum physics. If a cat is sealed in a box with poison and has a 50/50 chance of surviving, the cat is theoretically alive, dead, or both until someone looks inside the box and checks.

Just like Schrödinger’s Cat, your research could be useless, impactful, or both — but it just isn’t anything until someone looks inside the box.

An Argument for Waving Your Research Around Like A Fucking Flag

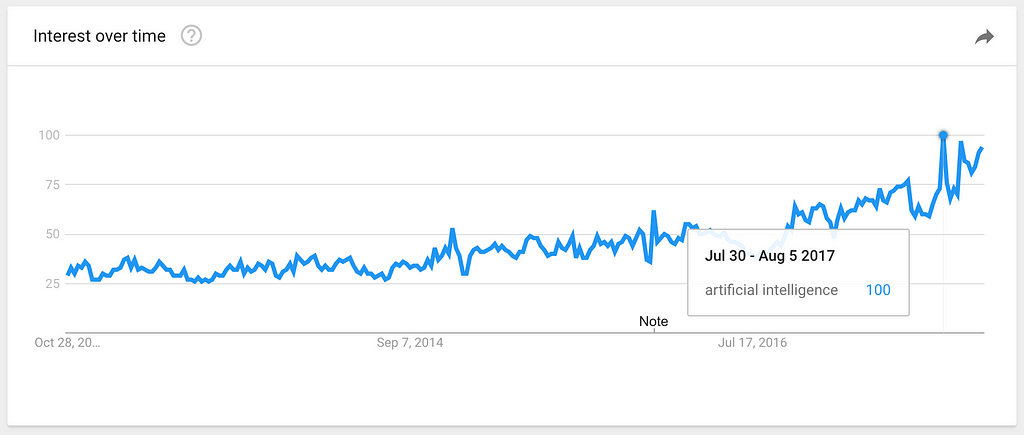

Companies like Facebook tie promotions and raises to the “impact” employees have on the project for a reason. Though this approach has a few downsides that I’ll explore in another article, it does prompt researchers to tie the impact of their research to specific product changes.

When this works well, it incentivizes researchers to more proactively share their findings and follow through on areas of interest with design and product management to make sure improvements ship.

When you have a bad case of Schrödinger’s Research, you may not be able to prove you’ve had any impact on the product at all.

This article will cover a few major steps in ‘socializing’ your research, that is, proactively bringing it into the design and decision-making processes.

In general, we socialize research for a few company-wide benefits:

- So the product is inspired by the user — user experience research findings shared early on in the design process can inspire elements of a design. This changes your product design process from “guessing and checking” to something more human-centered.

- So the product team makes better decisions — if designers and product managers are aware of the limitations and preferences of their user, they’ll make better UI and interaction decisions the first time through.

- So the user becomes the ‘judge’ — teams that make decisions based on user experience research are less likely to steer towards what they personally prefer at the expense of the user. This also makes creative decision-making more methodical by deferring to data rather than to individuals on the design or management team.

Common Causes of Schrödinger’s Research

Your team’s research blindness may be caused by any combination of: lack of awareness, ignorance, contempt, day drunkenness, or lack of bandwidth.

More likely though, there’s a problem with your method of communicating your findings.

- Your method of presentation is boring or hard to absorb — ex. dull, text-heavy slide decks or tons of confusing charts.

- Your distribution channels suck — ex. overcrowded Slack channels, un-used Wikis, un-navigable document folders.

- Your stakeholders don’t know the benefit of UX research — ex. they view research as a yes/no nod on a design, or they’re sure they know what’s best for the user.

Show Me What You Got

Even if your research is great, pertinent, timely — it still doesn’t speak for itself. User research, especially at a large company, is a role that requires the researcher to act as a spokesperson and mouthpiece for the user.

Here are a few ways that you can better share and promote your research findings. If you have other methods you employ, please let us know more about them in the comments!

Make Your Findings Interesting & Usable

Above all, the information you communicate must be accurate and given the correct context (e.g. sample size, statistical significance).

But once you’ve nailed accuracy, make that shit as colorful, eye-catching and incendiary as possible to get people to read and absorb it.

Here are a few tips on effective slide decks from “UX Research is Boring and Nobody Reads It”:

- Use colors and themes to help stakeholders identify what information the research covers

- Include a TL;DR and Recommendation slide as an index and for skimmers

- Don’t just include that an element worked/didn’t. Tell the reader WHY

Make Your Research a Reference

If your research is unfindable, it can’t be used.

I create a research “Directory” for each product I work on with a team and circulate the link with pretty much everything I share. The Directory serves as an index of links. In the Directory, I include the following:

- Description of product — Work with the product manager to come up with a brief description of the product and its basic functionality. Include a picture if possible.

- Description of use case / user’s need — Briefly sum up what you know about the specific user and task at hand.

- Primary Research Questions — What stage of research is this team at? Are we checking concepts against each other? Already fine-tuning usability? Just identifying areas for improvement of efficiency on an older product?

- Links to Research and Design Resources — Include links to foundational research on user type/demographic trends, or the task the product approaches. Include usability test reports, noting the date and what part of the product was tested so stakeholders can jump to relevant data. Include any live test data.

- Upcoming Studies — I list my upcoming research and information on how to watch online or attend. I try to invest time in getting teammates to watch research because it means better understanding, people to bat around thoughts with, and fewer corrections down the line.

- POC Information — List points of contact and contact information for engineering, design, product management, data and any other main stakeholders. This can help keep you from becoming the hub of all interactions.

Bring Your Research Directly To Your Stakeholder

I employ three main methods of sharing my research directly with peers.

1:1 Meetings

When I work on product teams I generally meet with the product manager once a week to gauge progress and priorities. I also meet 1:1 about once a week with the designers I work with to look at their most recent work and discuss edge cases and assumptions/concerns to explore in upcoming research.

1:1s or small group meetings are also an excellent time to introduce the research you’ve just completed. You can answer questions and explore individual follow-up. For example, if a designer will need to re-think an interaction based on user research, you can let them know to plan extra time in their schedule to address it.

Research Read Out is a Terrible Name

Though I haven’t found a better way to refer to collecting people and sharing my research, Research Read Out is a deadly boring title for something awesome.

For each big research report (ex. a diary study or a set of surveys that reveal a trend) I like to organize a read out to share findings with context and answer questions.

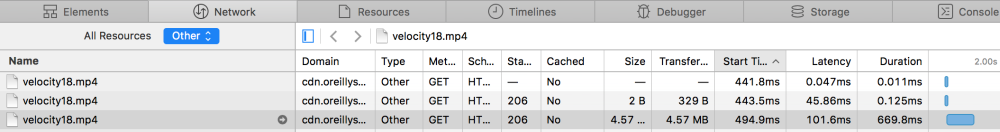

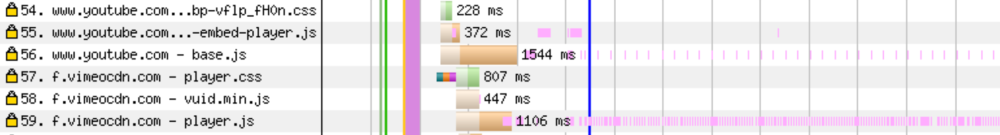

Schedule 45 minutes to 1 hour. Ensure you have video conferencing set up, share the link, and record the session for those who cannot be there. Go through your report slide-by-slide, adding a bit of color or real examples to provide context. Photos and video clips from testing are your friend.

Because I like to multitask and the audience is captive, I also use these sessions to assign work or formally call issues out to add to our development or design timelines. I ask people about future availability and put time on the calendar so we remember to follow up.

Don’t be scared. Jokes help when you’re being this direct. So does owning up to your approach and your intensity.

Speaking up and sharing your research can only help you and your user. When people react negatively to my action-oriented approach, it is to critique my personality rather than my accuracy or effectiveness. A grown woman can survive some shade, especially when my products end up shipping and numbers go up.

Insert Your Findings in the Design Process

User research shouldn’t be conducted in a vacuum. The researcher can work hand-in-hand with the rest of the product team to make sure that the user is at the center of the whole process.

Here are a few of my favorite ways to inject your research and knowledge about the user into the design process.

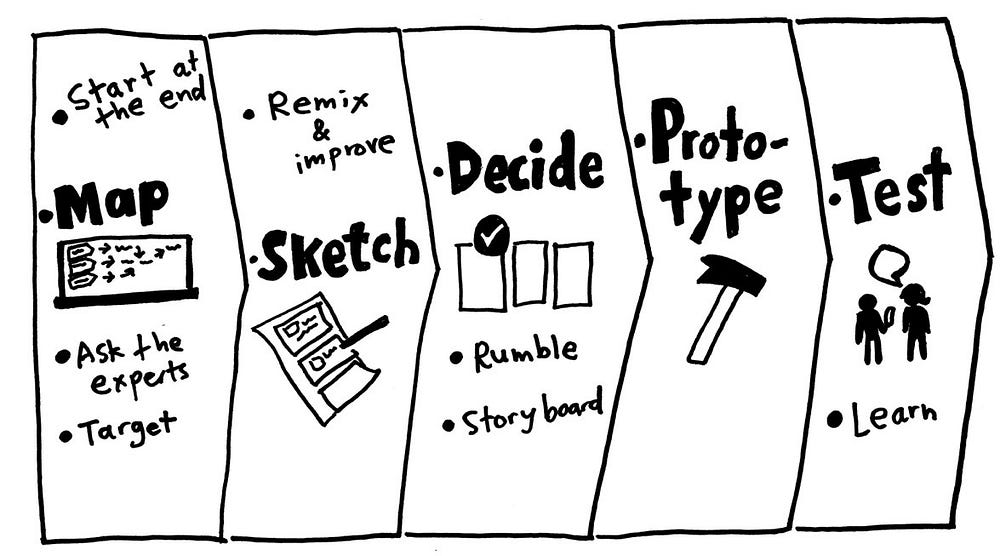

Run a Sprint

Once you’ve got a nest of related issues (for example, all kinds of reports of issues with the login process), it could be time to recommend a design sprint to address the whole section.

Work with the design team to insert user research into the sprint. I like to start sprints with a brief overview of our background knowledge on the user, their preferences and limitations.

When I have the time to get really fancy, I incorporate a quick round of user testing into the end of a sprint so designers can see reactions to the earliest sketches of their work and adjust accordingly.

Get Brainstorm Priorities Right

When your team is planning their next round of work or next set of feature priorities, jump in and insert some research.

Start brainstorms off by listing user needs and user priorities, then discuss company goals and metrics. If you ask your design team to just jump in and “maximize new signups”, you run the risk of designing predatory dark patterns.

Instead, try framing the prompt like this: “How might we satisfy (USER NEED) in a way that (COMPANY PRIORITY)?”

Insert Your User Into Decision Making

Ideally a team is on the same page when it comes to priorities and what gets recommended for build or shipping.

The user researcher’s responsibility is to advise the design, check impact with data, and above all, help your user achieve their goals.

As such, feel empowered to speak up in decision-making meetings to point people towards relevant data or knowledge that you think is important to know about the user.

For example, if the product is about to ship but you have concerns about people misunderstanding, it is okay to pump the brakes and point people towards the data that makes you feel that way.

In fact, it is your responsibility :)

Share What You Know

Do you have tips or ways you’ve effectively shared your research findings? Please tell us about them in the comments below!

- UX Research is Boring and Nobody Reads It — Tips and templates for communicating UX Research in a way that gets your team to enjoy and absorb it.

- Facebook Researcher Methods: talking about findings with your team — On-the-go debriefing methods to help your team make sense of the research they just observed.

- Sit Down and Speak Up: a UX researcher’s real role on a team — There’s waaaay more to UX research than just checking if the product sucks.

from Prototypr https://blog.prototypr.io/if-nobody-reads-your-research-did-it-really-happen-815bfca103ca?source=rss----eb297ea1161a---4