Imagine you run an eCommerce website. You know that visitors find you in search engines and that they interact with your homepage. They even get started on your checkout process. But at some point, they do not convert. And you do not know why. It might be time to update the information hierarchy. Or the user flows. But how do you know what needs rejigging and what does not?

A User Experience Audit (UX Audit) is a way to pinpoint less-than-perfect areas of a digital product, revealing which parts of a site or app are causing headaches for users and stymieing conversions. As with financial audits, a UX audit uses empirical methods to examine an existing situation and offers heuristics-based recommendations for improvements; in this case, user-centric enhancements. Ultimately, a UX audit should let you know how to boost conversions by making it easier for users to achieve their goals on your site or software.

This beginners’ guide to the UX audit aims to equip teams with the basics to conduct their own audit, or to better understand the benefits and limits of an external audit.

What Happens During a UX Audit?

First up, the big questions. What exactly happens during a UX audit and how does it fit in with usability testing? During a UX audit, an auditor will use a variety of methods, tools and metrics to analyse where a product is going wrong (or right):

- Review of business and user objectives

- Conversion metrics

- Customer care data

- Sales data

- Traffic/engagement

- Compliance with UX standards

- Usability heuristics

- Mental modeling

- Wireframing & Prototyping

- UX Best Practices

The difference between usability testing and a UX audit is one of information flow direction: an audit infers problems from a set of pre-established standards or goals, whereas testing infers problems from user actions. Granted, an auditor may use usability testing during an audit if they do not have access to the fundamental metrics, but they will combine the results with data collected over the longer term and weigh them up against industry standards and product goals.

What can a UX Audit tell you, and what are its Limitations?

It is important to point out that a UX audit is not a panacea for all a site’s UX woes. It is ineffective if recommendations are not actionable, or are not followed up. It also requires a significant investment of time and labour, to the detriment (or at least delay) of other tasks when the internal team does the audit.

However, while a UX audit cannot solve all the problems of an ailing site or app, it can be used to answer some profound questions:

- What is working, and what is not?

- Which metrics are collected and which should be collected?

- What does the data tell you about user needs?

- What has already been tried, and what impact did it have on metrics?

An efficiently done UX audit brings plenty of benefits to a product. It provides actionable follow-up activities based on empirical evidence, not hunches. It supports strategic design plans. It produces metrics that can be used in future tweaks. And it helps form hypotheses about why users act in a certain way, and how they might behave in the future. Most saliently of all, it contributes to boosting conversions and ROI once follow-up action is taken.

Who Should do a UX Audit, and When?

Tim Broadwater, writing on LibUX, sets out a good rule of thumb on when you might want to carry out a UX audit: “(an audit) should be conducted in the very beginning steps of a website, web application, dedicated app, or similar redesign project.” The word ‘redesign’ is key here; audits are usually carried out on a product or service that has been live for some time and has a backlog of data to examine. New features and products are more likely to be put through their paces with usability testing rather than a holistic audit.

As a general rule, companies without a dedicated UX team stand to benefit most from a UX audit; those with an in-house team are most likely evaluating the product and tweaking the experience continually.

If cash flow allows, it is advisable to have external parties carry out the audit: it is hard for internal teams to gain distance from the product, and subconscious prejudices will hamper the process. Nate Sonnenberg gives a helpful outline of how much it costs to call in the auditors: upwards of $1000 for a couple of days with a one-person team; the full monty of a UX team coming in for four weeks and providing in-depth, goal-orientated insights could cost up to $10,000. But, according to Nate, in 2-3 weeks you will find 80% of issues, which is enough to get started.

However, all is not lost if the budget does not stretch to an external audit: it is possible to audit your product internally by following an objective process, utilising the wide variety of available tools and (if you are not already) becoming au fait with UX best practices and standards.

Let us take a look at what you need to get started on your UX audit.

What do You Need to Get a UX Audit Done?

You will want to involve a cross-section of the team – designers, developers, product strategists and business managers. Also, it helps to nominate an audit lead, who will take decisions on process and timeframe.

As with any other project, the following also have to be agreed from the get-go:

- Audit goals (conversion, ROI, etc.)

- A time limit, which is important because you could, theoretically, go on auditing forever

- How many resources you are willing to dedicate to the audit: time, workforce, money

An Overview of the Process

Once you have set the basics, it is time to adumbrate the process. Starting from a bird’s-eye perspective, the UX audit consists of six main stages: metrics and materials gathering; validation of results; organisation of data; review of trends and tendencies; reporting of findings; and creation of evidence-supported recommendations.

Metrics and Materials Gathering

The most difficult part of a UX audit is possibly the first step, the gathering of relevant materials. If goals were properly defined before embarking on the audit, you will know what kind of information you need; now you just need to think about which metrics will provide you with that information.

Get team members on board with sharing existing information and tracking useful metrics you do not currently have, and this step will be easier.

Here are some sources of metrics and materials helpful in an audit:

- A heuristic product evaluation: Conduct a cognitive walkthrough of the product to see things from a customer’s perspective. Take notes as you try to achieve user goals, and focus on identifying potential obstacles. Be aware that your knowledge of the product will make this task difficult – basing the process on established criteria, such as Nielsen’s heuristics, will help you stay focused.

- Website and mobile analytics: If a heuristic evaluation provides you with qualitative data, then analytics tools will provide the quantitative information you need. Most people should be familiar with the basic functions of Google Analytics, such as traffic source, traffic flows and trends over time; more advanced functions can elucidate user flows within the website, conversion (and abandonment) hotspots and what users are doing before and after they visit your site. Tools such as Kissmetrics and Crazy Egg can supplement basic analytics with features such as heat maps and churn rates; app analytics can be collected either through Google mobile analytics, or through a dedicated tool such as Mixpanel. Make sure you are going back far enough in the analytics to recognise trends, rather than basing the audit on isolated data points.

- Conversion rates or sales figures: If the premise of your site or app is eCommerce, sales or download figures can be useful to a UX audit. For example, here at Justinmind, we measure how many blog readers download our prototyping tool and from which particular posts: this gives us an insight into how our content fits in with the wider user experience of Justinmind and whether we are meeting user pain points.

- Stakeholder interviews or user surveys: As with any UX endeavour, you have got to get out there and talk to real people. Start off by interviewing internal product stakeholders such as product owners and developers, questioning them for insights on the product’s plan, requirements and ongoing development challenges. You can also ask what they want to see out of the UX audit, which will generate faith and goodwill in the audit process. Also, find out if the marketing or sales department has ever conducted user surveys: there is likely to be a wealth of comment and feedback within these surveys that you can use in a UX audit. You can organise this feedback into categories – findings per screen or task, for example – and according to severity.

- Previous product requirements: Acquiring access to an application’s original requirements will save you time and help you understand why design decisions happened the way they did; this information will be useful when it comes to writing up viable recommendations.
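As a rough illustration of how analytics figures can point to abandonment hotspots, the sketch below computes the share of sessions lost between consecutive funnel steps. The step names and session counts are invented for illustration, and `funnel_dropoff` is a hypothetical helper, not a feature of any analytics tool mentioned above.

```python
from typing import List, Tuple

def funnel_dropoff(steps: List[Tuple[str, int]]) -> List[Tuple[str, str, float]]:
    """Return (from_step, to_step, abandonment_rate) for each consecutive pair of steps."""
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        # Abandonment rate = share of sessions that did not reach the next step.
        rate = 0.0 if count_a == 0 else 1 - count_b / count_a
        results.append((name_a, name_b, round(rate, 3)))
    return results

# Hypothetical session counts per step, invented for illustration only.
checkout_funnel = [
    ("homepage", 10000),
    ("product page", 4000),
    ("basket", 1000),
    ("checkout", 400),
    ("order confirmed", 80),
]

dropoffs = funnel_dropoff(checkout_funnel)
# The step pair with the highest abandonment rate is a candidate for closer review.
worst = max(dropoffs, key=lambda row: row[2])

for from_step, to_step, rate in dropoffs:
    print(f"{from_step} -> {to_step}: {rate:.1%} abandoned")
print("Largest drop-off:", worst[0], "->", worst[1])
```

A high abandonment rate only shows where users leave, not why; it is a prompt for the qualitative methods in this list rather than a finding in itself.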

At this stage, it is possible to pause and use usability tests to validate the qualitative data you have collected. For example, if past user surveys suggested that the customer check-out process was complicated, conduct usability tests to see whether you can back up this so far unsupported claim.

How to Organise the Materials you have Collected

In a word, spreadsheets. All the information obtained in step one should be tracked on a worksheet and aggregated. Upload the spreadsheet into the Cloud and make it a living, collaborative document where questions and ideas are recorded alongside relevant metrics.
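For teams that prefer to build the worksheet from raw notes, here is a minimal sketch of aggregating findings per screen and by severity, then exporting them as CSV for upload to a shared spreadsheet. The field names, the sample findings and the 1 to 4 severity scale are all assumptions made for illustration.

```python
import csv
import io
from collections import Counter

# Hypothetical findings; field names and the 1-4 severity scale are assumptions.
findings = [
    {"screen": "checkout", "source": "user survey", "severity": 4},
    {"screen": "checkout", "source": "heuristic evaluation", "severity": 2},
    {"screen": "homepage", "source": "analytics", "severity": 3},
    {"screen": "homepage", "source": "stakeholder interview", "severity": 2},
    {"screen": "search", "source": "heuristic evaluation", "severity": 1},
]

def summarise(items):
    """Return (screen, number_of_findings, worst_severity) rows, most severe first."""
    counts = Counter(item["screen"] for item in items)
    worst = {}
    for item in items:
        worst[item["screen"]] = max(worst.get(item["screen"], 0), item["severity"])
    # Sort by worst severity, then by number of findings, both descending.
    return sorted(
        ((screen, counts[screen], worst[screen]) for screen in counts),
        key=lambda row: (row[2], row[1]),
        reverse=True,
    )

def to_csv(rows):
    """Serialise summary rows as CSV text that a spreadsheet tool can import."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["screen", "findings", "worst_severity"])
    writer.writerows(rows)
    return buffer.getvalue()

summary = summarise(findings)
print(to_csv(summary))
```

Sorting by worst severity first is one possible choice; a team might equally sort by number of findings or by audit goal.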

If you are unsure about what you need to put in the spreadsheet, try out these helpful templates:

Look for Trends and Tendencies

The moment when you have to turn data into insights is often nerve-wracking: turning metrics into meaningful change is a profound issue that is beyond the remit of this article. Suffice to say that there are methods that will help you make sense of the information in front of you, such as data-mining, card sorting (not just for UX architects but also perfect for aggregating any mound of information) and insight incubation. Check out Steve Baty’s post in UXmatters for more on finding patterns in UX research.

Reporting of Findings

After mining data for insights, it is time to develop hypotheses about the application’s user experience status: why do users act as they do, instead of in the way stakeholders want them to? You can compare the insights you garnered against the following four keystones of successful products:

- Relevance: is the site or app addressing a user pain point? Is there a disconnection between expectation and reality when users find your product?

- Value proposition: is the value to the user clear and convincing?

- Usability: are there points of ambiguity or uncertainty in your product interface, or do customers intuitively understand what to do?

- Action: Are calls to action visible and relevant, and do they incentivise users to take action?

Creation of Evidence-Supported Recommendations

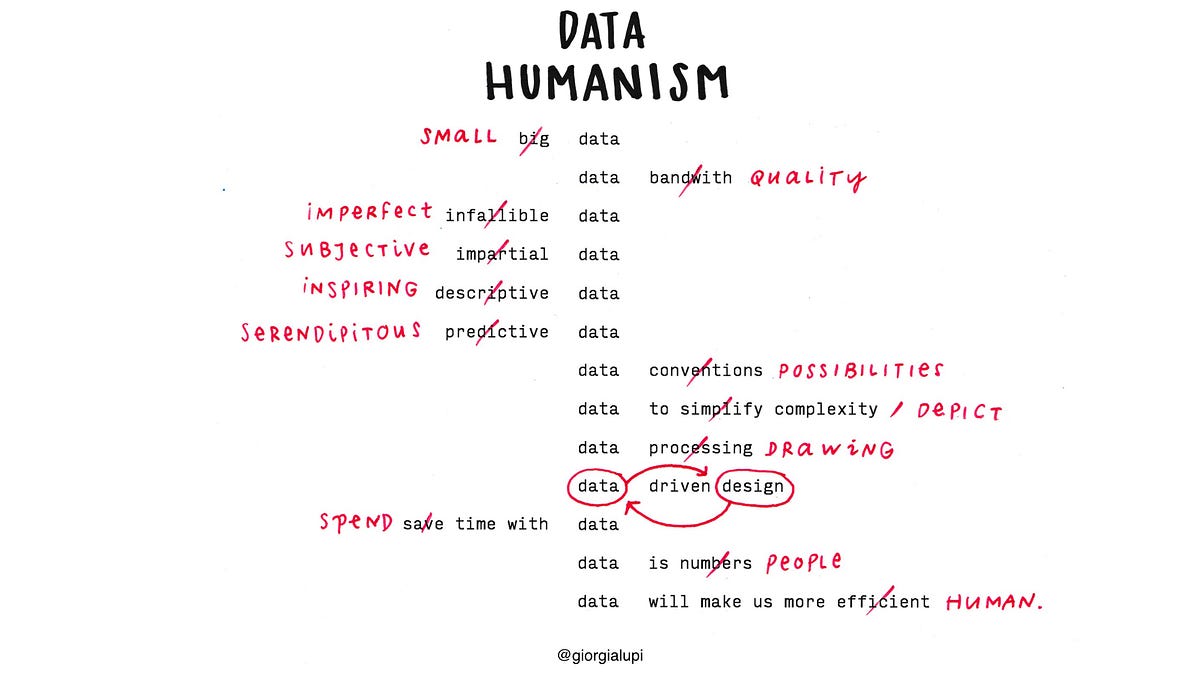

Finally, data-driven recommendations for UX improvements can be written. The key here is to make recommendations as applicable as possible. We are fans of the recommendations given by Joseph Dumas, Rolf Molich and Robin Jeffries in ‘Describing Usability Problems: are we sending the right message?’:

- Emphasise the positive

- Express your annoyance tactfully

- Avoid usability jargon

- Be as specific as you can

On a more substantive level, do not forget to supplement recommendations with examples, rather than just identifying areas for general change. For example, in this sample UX audit report from Intechnic, recommendations include “for forms and resources, rearrange according to the number of clicks” and “in navigation drop-downs, remove images”. Suggesting solutions for the design-development team will always be a more positive, effective tactic than merely criticising where the application has failed on user experience.

Basic UX Audit Resource Kit

Your UX audit kit will obviously depend on the product you want to audit, and the final objectives that you’ve set out. However, the following resources and activities should equip you with everything you need to get started:

A Beginner’s Guide to the UX Audit – the Takeaway

A user experience audit requires significant investment in terms of time and human resources if done internally, and money if contracted out to professional UX auditors; it is not to be undertaken lightly. However, the benefits to an established site or app are visible, particularly if conversions are stagnant or slow-growing and the users’ voice is not represented in the product improvement process. By undertaking a UX audit, you can bring about significant, data-driven change to an application and see upticks in both user satisfaction and ROI.