Introduction

Staying current in the JavaScript ecosystem is not for the faint of heart.

It’s challenging for those entering the industry to follow what’s happening amongst the new libraries, frameworks, concepts, and strong opinions.

It’s a good reminder that if you’re on the bleeding edge, you are usually the one bleeding. Defaulting to “boring” technologies, ones you are familiar with, and being a late adopter is often a great choice.

With that said, this post will get us up to speed on the bleeding edge of frameworks in the JavaScript ecosystem.

We’ll make sense of the current landscape by looking at the past pain points when building large-scale web applications.

Rather than focus on the proliferation of solutions, we’ll dive into the underlying problems, where each framework gives different answers and makes different trade-offs.

By the end, we’ll have a high-level model of how popular frameworks like React, Svelte, Vue, Solid, Astro, Marko, Fresh, Next, Remix, and Qwik, along with the “meta frameworks”, fit into today’s landscape.

It’s helpful to understand the past to make sense of the present. We’ll start with a trip down memory lane to see the path behind us.

This story’s been told before. This time we’ll focus on the problems on larger projects that sparked alternative approaches and ways of thinking.

A handwavy history of web pages

The web began as static documents linked together. Someone could prepare a document ahead of time, and put it on a computer.

The cool thing now was that somebody else could access it — without having to move their physical body to the same geographic location. Pretty neat.

At some point, we thought it would be cool to make these documents dynamic.

We got technologies like CGI that allowed us to serve different content based on the request.

We then got expressive languages like Perl to write these scripts, which influenced the first language explicitly built for the web: PHP.

The nice innovation with PHP was connecting HTML directly to this backend code. It made it easy to programmatically create documents that embedded dynamic values.

One of the most significant breakthroughs for the web was going from this:

<html>

<body>

This document has been prepared ahead of time.

Regards.

</body>

</html>

To having easily embedded dynamic values:

<html>

<body>

Y2K? <?php echo time(); ?>

</body>

</html>

Pandora’s box opened

These dynamic pages were a hit. We could now easily customize what we sent to users, including cookies that enabled sessions.

Server-based templating frameworks emerged across the language ecosystems that were now talking to databases. These frameworks made it easy to start with static pages and scale up to dynamic ones.

The web was evolving quickly, and we wanted more interactive experiences. For this we used browser plugins like Flash. For everything else, we would “sprinkle” JavaScript fragments over the HTML served from the backend.

Tools like jQuery and Prototype cropped up and smoothed over the rough edges of web APIs and the quirks between the competing browsers.

Fast forwarding and hand waving. Tech companies were getting bigger, and as projects and teams grew, it was common for more business logic to creep into these templates.

Server code was being written to massage data into the server templating language. Templates often evolved into a mishmash of business logic that accessed global variables. Security was becoming a concern, with attacks like SQL injection commonplace.

Eventually we got “Ajax: A New Approach to Web Applications”.

The new thing you could do now was update the page asynchronously, instead of a synchronous refresh.

This pattern was popularized by the first big client-side applications like Google Maps and Google Docs.

We were starting to see the power of the web’s distribution for desktop-style software. It was a significant step forward compared to buying software on CDs down at the shops.

JavaScript gets big

When Node came around, the new thing it enabled was writing your backend in the same language as the frontend, all in an async-first model developers were familiar with.

This was (and is) compelling. With more businesses coming online, the competitive advantage was being able to ship and iterate fast.

Node’s ecosystem emphasized reusing small single-purpose packages you could grab off the shelf to get stuff done.

The frontend backend split

Our appetite for a web that could rival desktop and mobile continued to grow. We now had a collection of reusable “widget” libraries and utilities like jQuery UI, Dojo, MooTools, Ext JS, and YUI.

We were getting heavy on those sprinkles and doing more in the frontend. This often led to duplicating templates across the frontend and backend.

Frameworks like Backbone and Knockout and many others popped up. They added separation of concerns to the frontend via the MVC, MVVM et al. architectures, and were compatible with all the widgets and jQuery plugins we had collected.

Adding structure helped scale all this frontend code and accelerated moving templates over from the backend.

We were still writing fine-tuned DOM manipulations to update the page and keep components in sync. This problem was non-trivial, and bugs related to data synchronization were common.

Angular, backed by Google, stepped onto the scene. It promised a productivity boost by powering up HTML to be dynamic. It came with two-way data binding, with a reactivity system inspired by spreadsheets.

These declarative two-way bindings removed much of the boilerplate in updating things imperatively. This was nice and made us more productive.

As things scaled, it became hard to track down what was changing, and performance often suffered: cycles of updates could cascade and occupy the main thread (today libraries like Svelte keep two-way bindings while mitigating their downsides).

Alongside the rise of mobile, these productivity-boosting frameworks accelerated the frontend backend split.

This paved the way for exploring different architectures that emphasized this decoupling.

This was a major part of the JAMstack philosophy, which emphasizes pre-baking HTML ahead of time and serving it from a CDN.

At the time, this was a throwback to serving static documents.

But now we had a git-based workflow, robust CDN infrastructure that didn’t rely on a centralized server far away, and decoupled frontends talking to independent APIs. Chucking static assets on a CDN had much lower operational cost than operating a server.

Today, tools like Gatsby, Next, and many others leverage these ideas.

React rises

Hand waving and fast forwarding into the era of big tech. We’re trying to move fast and break things.

For those entering the industry, JavaScript was big, and building a decoupled SPA backed by a separate backend was becoming the status quo.

There were a couple of challenges that React was born from at Facebook:

- Consistency when data changes frequently: Keeping many widgets in sync with each other was still a significant challenge. A lack of predictability in the data flow made this problematic at scale.

- Scaling organizationally: Time to market and speed were prioritized. Onboarding new developers who can get up to speed quickly and be productive was essential.

React was born and the cool new thing you could do was write frontend code declaratively.

Separation of concerns on the frontend was famously re-thought, where previous MVC frameworks didn’t scale.

Moving up from templates to JavaScript-driven JSX was initially hated. But most of us came around.

The component model allowed for decoupling separate frontend teams, who could more easily work on independent components in parallel.

As an architecture, it allowed the layering of components, from shared primitives to “organisms”, composed up to the page’s root.

A unidirectional data flow made state changes easier to understand, trace, and debug. It added the predictability that was hard to find previously.

The virtual DOM meant we could write functions that returned descriptions of the UI and let React figure out the hard bits.

This solved the consistency issues when data changed frequently and made the composition of templates much more ergonomic.
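To make that idea a bit more concrete, here is a minimal sketch of "UI as a function of state". The helper names `h`, `view`, and `diff` are made up for this example, and the diff is a deliberately naive, position-based comparison. It is nowhere near React's actual reconciliation, but it shows the shape of the approach: describe the UI as plain objects, then let something else work out what changed.

```javascript
// Build a plain-object description of an element (a "virtual" node).
function h(tag, props, ...children) {
  return { tag, props: props || {}, children };
}

// The UI is a pure function of state: same state in, same description out.
function view(state) {
  return h(
    "ul",
    { class: "todos" },
    ...state.todos.map((todo) =>
      h("li", { class: todo.done ? "done" : "open" }, todo.title)
    )
  );
}

// Naive diff: walk two descriptions and collect the patches needed to turn
// the old one into the new one. Children are compared by position only.
function diff(oldNode, newNode, path = "root") {
  if (oldNode === undefined) return [{ type: "create", path }];
  if (newNode === undefined) return [{ type: "remove", path }];
  if (typeof oldNode === "string" || typeof newNode === "string") {
    return oldNode === newNode ? [] : [{ type: "replace", path }];
  }
  if (oldNode.tag !== newNode.tag) return [{ type: "replace", path }];

  const patches = [];
  const keys = new Set([
    ...Object.keys(oldNode.props),
    ...Object.keys(newNode.props),
  ]);
  for (const key of keys) {
    if (oldNode.props[key] !== newNode.props[key]) {
      patches.push({ type: "setProp", path, key, value: newNode.props[key] });
    }
  }
  const length = Math.max(oldNode.children.length, newNode.children.length);
  for (let i = 0; i < length; i++) {
    patches.push(
      ...diff(oldNode.children[i], newNode.children[i], `${path}/${i}`)
    );
  }
  return patches;
}

// Two renders of the same view with different state.
const before = view({ todos: [{ title: "Write post", done: false }] });
const after = view({
  todos: [
    { title: "Write post", done: true },
    { title: "Publish", done: false },
  ],
});

// One prop change on the first item, one new node for the second.
const patches = diff(before, after);
```

The component author only ever writes `view`. The bookkeeping of which nodes to touch lives in one place, which is where the consistency comes from.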

React at scale – hitting CPU and network limits

More fast forwarding. React’s a hit and has become an industry standard, often even for sites that don’t need its power. At the far end of scale, we start to see some limits.

Running up against the CPU

The DOM was a problem with React’s model. Browsers weren’t built to constantly create and destroy DOM nodes in a continuous rendering cycle.

Like any problem that can be solved by introducing a new level of indirection, React abstracted it behind the virtual DOM.

People need to perceive feedback within roughly 100ms for things to feel smooth, and much sooner when doing things like scrolling.

Combined with a single-threaded environment, this optimization becomes the new bottleneck in highly interactive applications.

Large interactive apps were becoming unresponsive to user input while the reconciliation between the virtual DOM and the real DOM happened. Terms like “long task” started popping up.

This led to an entire rewrite of React in 2017 that contained the foundations for concurrent mode.

Runtime costs adding up

Meanwhile, moving faster meant shipping more code down the wire. Slow start-up times were an issue as browsers chewed through JavaScript.

We started noticing all the implicit runtime costs, not only with HTML and the virtual DOM, but with how we wrote CSS.

The component model smoothed over our experience with CSS. We could colocate styles with components, which improved deletability. A fantastic attribute for anyone who’s been scared to delete CSS code before.

The cascade and all its specificity issues we’d been fiddling with were being abstracted away by CSS in JS libraries.

The first wave of these libraries often came with implicit runtime costs. We needed to wait until the components were rendered before injecting those styles onto the page. This led to styling concerns being baked into JavaScript bundles.

At scale, poor performance is often a death by a thousand cuts, and we were noticing these costs. This has since led to new CSS in JS libraries that focus on having no runtime cost by using an intelligent pre-compiler to extract stylesheets.

Network inefficiency and render-blocking components

When the browser renders HTML, render-blocking resources like CSS or scripts prevent the rest of the HTML from displaying.

Parents often become render-blocking for child components in a component hierarchy. In practice, many components depend on data from a database and code from a CDN (via code-splitting).

This often leads to a waterfall of sequential blocking network requests, where components fetch data after they render, unlocking async child components, which then fetch the data they need, repeating the process.
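A back-of-the-envelope model makes the cost visible. The sketch below assumes a hypothetical component tree in which every component needs one request, with invented latencies. In the waterfall case a child's request can only start once its parent's has finished. In the hoisted case every request starts at the same time. It ignores bandwidth, server time, and code loading, so treat it as an illustration rather than a measurement.

```javascript
// Hypothetical component tree: each node needs one request that takes
// `latencyMs`. The names and numbers are invented for illustration.
const page = {
  name: "Page",
  latencyMs: 200,
  children: [
    {
      name: "Feed",
      latencyMs: 300,
      children: [{ name: "Comments", latencyMs: 250, children: [] }],
    },
    { name: "Sidebar", latencyMs: 150, children: [] },
  ],
};

// Fetch-on-render: a child's request starts only after its parent's request
// has finished, so latencies add up along the slowest path through the tree.
function waterfallTime(node) {
  const slowestChild = Math.max(0, ...node.children.map(waterfallTime));
  return node.latencyMs + slowestChild;
}

// Hoisted fetching: all requests start together, so the total is simply the
// slowest single request.
function parallelTime(node) {
  return Math.max(node.latencyMs, ...node.children.map(parallelTime));
}

const waterfallMs = waterfallTime(page); // 200 + 300 + 250 = 750
const parallelMs = parallelTime(page); // slowest single request = 300
```

With these made-up numbers, the nested version takes 750ms before the deepest component has its data, against 300ms when everything is requested up front.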

It’s common to see “spinner hell” or cumulative layout shifts where bits of UI pop into the screen as they load.

React has since released Suspense to help smooth over the loading phases of a page. But by default, it does not prevent sequential network waterfalls. Suspense for data fetching allows the pattern of “render as you fetch”.

How does Facebook address these issues?

We’ll continue our detour to understand how some of React’s tradeoffs are mitigated at scale. This will help frame the patterns in the new frameworks.

- Optimizing runtime costs

In React there’s no getting around the runtime cost of the virtual DOM. Concurrent mode is the answer to keeping things responsive in highly interactive experiences.

In the realm of CSS in JS, an internal library called Stylex is used. This keeps the ergonomic developer experience without the runtime cost when thousands of components are rendered.

- Optimizing the network

Facebook avoids the sequential network waterfall problem with Relay.

For a given entry point, static analysis determines exactly what code and data need to load.

This means both code and data can be loaded in parallel in an optimized GraphQL query.

This is significantly faster than sequential network waterfalls for initial loads and SPA transitions.

- Optimizing JavaScript bundles

A fundamental problem here is shipping JavaScript that isn’t relevant to specific users.

This is hard when there are A/B tests, feature-flagged experiences, code for particular types and cohorts of users, and language and locale settings.

When there are many forking branches of code, a static dependency graph can’t see the modules that get used together in practice for specific cohorts of users.

Facebook uses an AI-powered dynamic bundling system. This leverages its tight client-server integration to calculate the optimal dependency graph based on the request at runtime.

This is combined with a framework for loading bundles in phased stages, based on priority.

What about the rest of the ecosystem?

Facebook has complex infrastructure and in-house libraries built up over many years. If you’re a big tech company, you can chuck an incredible amount of money and resources at optimizing these trade-offs at the far end of scale.

This creates a pit of success for frontend product developers to get stuff done while maintaining performance.

Most of us are not building a suite of applications at Facebook’s scale. Still, at a lot of large organizations performance is the hot topic. We can learn from these patterns – things like fetching data as high up as possible, parallelizing the network, and using inline requires, etc.

Big tech companies often roll their own application frameworks internally, leaving many solutions scattered across various userland libraries.

This has left many with JavaScript ecosystem fatigue and framework burnout.

The world of JavaScript: scattered, divided, leaderless

Still with us? We’re in the era of SPA by default. This is the status quo for those entering the industry.

React is the undisputed champ, and we see the trade-offs at big scale.

React provided one layer, leaving other necessary layers up to the ecosystem. This caused churn in every vital aspect: routing, state management, data fetching, etc., each having its own concepts and APIs.

Immutable versus mutable, OOP with classes versus functional style: the debates and libraries bloomed.

Today, many developers are drowning in uncertainty around what choices to make and how to architect things.

Arise, arise riders of React alternatives!

Components were sticky. But the runtime costs, JavaScript-driven JSX, and the complexity were up for debate.

Many grassroots alternatives, not from big tech companies, have gained widespread mind share. Let’s get a super high-level overview of them:

Vue

When people were weighing a migration to Angular 2 or React, Vue filled the gap with its low barrier to entry.

You didn’t have to mess around with a complicated webpack config. You could pull it from a CDN and start building components using templates intuitive to many developers.

Core pieces like routing and styling were available from the core team, reducing decision fatigue.

It also mitigated aspects of React’s reconciliation algorithm by using static analysis on templates to enable optimizations for a faster runtime, an approach referred to as a compiler-informed virtual DOM.

Svelte

Svelte pioneered the pre-compiled approach, removing complexity and overhead we were seeing at runtime.

The idea was to have a framework that compiles itself away, with a streamlined output of minimal vanilla JavaScript, all while maintaining a modern authoring experience based on declarative components with a familiar mutable style of JavaScript.

It eschewed the virtual DOM entirely and thus wasn’t bound by the constraints of having an immutable style of writing JavaScript to do things like updating state. For many, this was a much simpler and saner model of building stuff on the web.

Solid

Solid came with a straightforward and predictable reactivity model, inspired by Knockout. Like React, it eschews templates for ease in composability of functions.

While React takes the approach of constantly re-rendering the world, Solid renders once and then uses a streamlined reactivity system to do fine-grained updates without the overhead of a virtual DOM.

Solid looks like what many of us React developers wished our new code using hooks looked like. Its API is perhaps more ergonomic, smoothing over many things like hooks dependency arrays, with its focus on fine-grained reactivity and composable primitives.
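To get a feel for what "fine-grained" means here, below is a toy reactivity system. The function names mirror Solid's `createSignal` and `createEffect`, but the implementation is an assumption-laden sketch written for illustration: it has no batching, no cleanup, and no handling of dependencies that change between runs. The point is only that an update re-runs the effects that read the changed value, and nothing else.

```javascript
// The effect currently being run, if any. Reading a signal while an effect
// is running subscribes that effect to the signal.
let currentEffect = null;

function createSignal(initialValue) {
  let value = initialValue;
  const subscribers = new Set();

  const read = () => {
    if (currentEffect) subscribers.add(currentEffect);
    return value;
  };

  const write = (nextValue) => {
    if (nextValue === value) return; // nothing changed, nothing to do
    value = nextValue;
    // Re-run only the effects that actually read this signal.
    for (const effect of [...subscribers]) effect();
  };

  return [read, write];
}

function createEffect(fn) {
  const run = () => {
    const previous = currentEffect;
    currentEffect = run;
    try {
      fn();
    } finally {
      currentEffect = previous;
    }
  };
  run(); // run once up front to discover which signals the effect reads
}

// Usage: two independent signals, each with its own effect.
const log = [];
const [count, setCount] = createSignal(0);
const [user, setUser] = createSignal("Ada");

createEffect(() => log.push(`count is ${count()}`));
createEffect(() => log.push(`user is ${user()}`));

setCount(1); // only the first effect re-runs
setUser("Grace"); // only the second effect re-runs
```

In a real framework the effects would update specific DOM nodes rather than push to a log, which is how a component function can run once and still keep the page in sync.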

Learning from each other

There is much more to be said about each of these frameworks. Each makes different trade-offs based on their underlying models and preferences.

In reality, evolution often comes from people winging it as they go, trying different solutions to current pain points, with each framework learning from the others.

A big theme is streamlining and simplifying. Moving things out of runtime to compile time is one of these themes, inspiring “React Forget”, a feature to potentially remove the need for memoization.

What they all have in common is solving the interactive part of a document. As we’ve seen, this is a challenging aspect to get right in a way that scales easily.

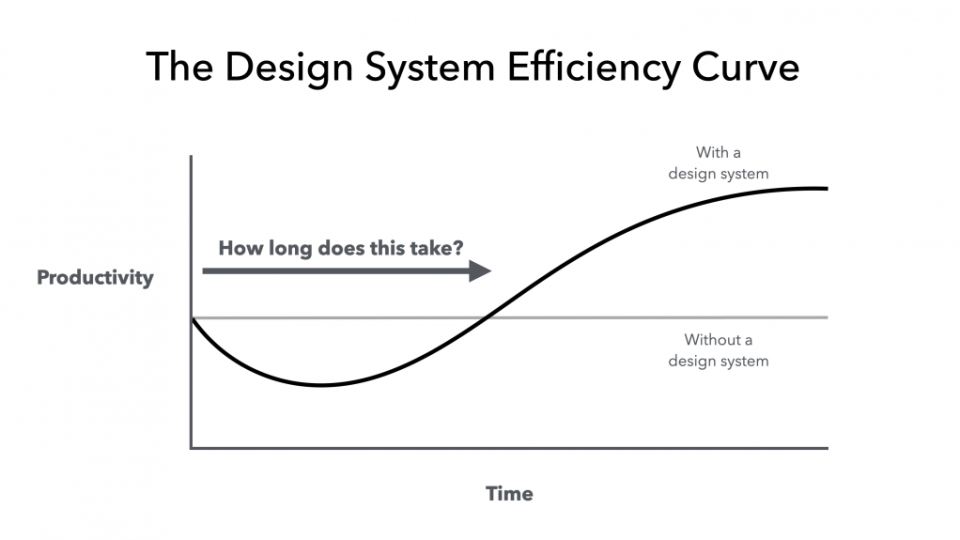

Meanwhile, we’re seeing the tradeoffs with pure client-side rendering. That blank white screen when loading a page is taking longer.

On mobile devices and networks, it’s a bit of a disaster.

For a lot of websites, moving fast, with fast performance that doesn’t degrade, becomes a major competitive advantage.

We took a step back and explored ways to render content faster by rendering it first on the server (only to discover that it’s a tradeoff).

This initial step back paved the way for many “meta” frameworks and a new wave of HTML-first frontend frameworks.

The new wave of JavaScript web frameworks

We shall not cease from exploration. And the end of all our exploring will be to arrive where we started. And to know the place for the first time.

Inspired by PHP, Next stepped up to streamline the process of creating static pages pushed to a CDN. It also smoothed over the hairy parts of using SSR in React apps.

It came with some much-wanted opinions on structuring an app, using file-based routing. And a bunch of other nice features.

Since then, a wave of other “meta” frameworks has been created. Vue has a similar framework in Nuxt, Svelte has SvelteKit, and Solid has the up-and-coming SolidStart.

These are server-first, designed to integrate all pieces and ergonomics of a web framework, not only the interactive element that has been in the spotlight for a long time.

The conversation starts to be about improving the user experience and the developer experience without trading one for the other.

The MPAs strike back

A multi-page architecture serves HTML from a server, where navigations are full page refreshes.

Fast startup is critical for many sites, especially those outside a login. It directly ties to things like search rankings and bounce rates.

Using a client-rendered library like React is overkill for many sites and apps with low interactivity.

For many this means flipping the script: being HTML-first instead of JavaScript-first, MPA over SPA, and defaulting to zero JavaScript.

Frameworks like Marko, Astro, Fresh, Rocket, Enhance, and others bake in this approach.

In contrast to some of the meta-frameworks, the router stays on the server, rather than having the client-side router take over after the first load.

In the JavaScript ecosystem, this is a throwback to server-based templating shortly after Node.

This round of MPAs differs from previous generations. The “sprinkles” are authored in a component-based model, often using the islands pattern, in the same language across frontend and backend code, and often co-located in the same file.

This removes the issue of duplicated templating code constructed differently across frontend and backend when adding in some sprinkles of interactivity.

The return of progressive enhancement

Remix brings a return to progressive enhancement in the React ecosystem.

From a technical perspective, it is a compiler for React Router and, like other up-and-coming meta-frameworks, an edge-compatible runtime.

It addresses the same challenges Facebook solves at scale with Relay through its nested layout and data fetching API.

This allows early fetching of code and data in parallel, which is a good prerequisite for the “render as you fetch” pattern with Suspense.

The emphasis on progressive enhancement means its APIs are based on web standards, with the data mutation story based on HTML forms.

Instead of wiring up event handlers to make imperative fetch requests, you render forms that submit data to action functions that process them on the server (often in the same file), an idea inspired by PHP.
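Here is a rough sketch of that form-plus-action shape, written against the standard `Request`, `Response`, and `FormData` APIs rather than Remix itself. The handler follows the `action({ request })` convention, but the `todos` array, the `/todos` URL, and the `submitTodo` helper are all invented for the example, and it assumes a runtime where the Fetch API classes are global (such as recent versions of Node).

```javascript
// A server-side handler in the style of a Remix `action`: it receives a
// standard Request and returns a standard Response. `todos` is a stand-in
// for a real database.
const todos = [];

async function action({ request }) {
  const formData = await request.formData();
  const title = String(formData.get("title") || "").trim();

  if (title === "") {
    return new Response("Title is required", { status: 400 });
  }

  todos.push({ title });
  // Redirect after a successful POST so a refresh does not resubmit the form.
  return new Response(null, { status: 303, headers: { Location: "/todos" } });
}

// The matching markup needs no client-side JavaScript to work:
//
//   <form method="post" action="/todos">
//     <input name="title" />
//     <button type="submit">Add</button>
//   </form>

// Hypothetical helper that simulates the browser submitting the form.
function submitTodo(title) {
  const request = new Request("http://localhost/todos", {
    method: "POST",
    body: new URLSearchParams({ title }),
  });
  return action({ request });
}
```

Because the contract is just "form in, response out", the same handler works whether the browser submits the form natively or a framework intercepts the submit and sends it with `fetch`.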

Similarly to Next, applications can be scaled down to work without JavaScript like a traditional server-rendered MPA, or scale up to an interactive React app on a per-page basis.

Remix also provides many APIs and patterns for handling things like optimistic UI updates, handling of race conditions, and graceful degradation that you’d hope a well-thought-out framework that focuses on the end-user experience provides.

A hybrid future

Not to be confused with the QUIC protocol, Qwik the framework is all about minimizing unnecessary JavaScript.

While its API looks like React, its approach differs from the other meta-frameworks by homing in on the hydration process.

Just as you can pause a virtual machine and move it to a different physical machine, Qwik applies this idea to the work that happens between the server and the browser.

Its idea of “resumable” hydration means you can start something on the server and resume it on the client without any rework.

This is in contrast to partial hydration, which changes when the hydration work happens; Qwik tries to avoid doing it in the first place.

It’s an interesting set of ideas leveraging the power of having a tight server and client integration that allows this kind of dynamic bundling and serving.

These concepts start to blur the line between MPAs and SPAs, where an application can start as an MPA and transition to a SPA dynamically. Sometimes referenced (in more buzzwords) as “transitional apps”.

Life at the edge

Meanwhile backend infrastructure and hosting are constantly improving.

CDNs at the edge made serving our SPA’s static assets easy and fast. It’s becoming feasible to move runtimes and data to the edge now too.

This is creating a new runtime layer outside the browser, but still as close to users as possible.

This makes it easier to move a lot of things currently done in the browser back to servers, while somewhat mitigating the network latency tradeoff that’s made by doing that.

Ideas like React server components are exploring the concept of streaming server component output to browsers from this layer.

New JavaScript runtimes like Deno and Bun are cropping up to simplify and streamline the JavaScript ecosystem, and are built for this new world of edge runtimes optimized for speed and fast startup times.

It’s also led to application frameworks adopting standard web APIs to run in this layer, with serverless functions and streaming architectures being explored.

Streaming is a big theme here. It allows flushing HTML early, so the browser can progressively render it as it is received and start chewing through any render-blocking resources like CSS and JS while the backend fetches any data simultaneously. This helps parallelize a lot of otherwise sequential round trips.
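As a small sketch of the idea, the async generator below yields a page in chunks. It kicks off a data request first, flushes the document shell without waiting for it, and only pauses at the point where the data is actually needed. `loadProducts`, the product names, and `/app.css` are placeholders made up for the example, not part of any framework.

```javascript
// Hypothetical data source standing in for a database or API call.
function loadProducts() {
  return new Promise((resolve) => {
    setTimeout(() => resolve(["Keyboard", "Monitor"]), 20);
  });
}

// An async generator yields HTML in chunks. The data request is started
// first, then the shell is flushed without waiting for it.
async function* renderPage() {
  const productsPromise = loadProducts(); // kick off the fetch immediately

  // Flush the head early so the browser can start on CSS and JS.
  yield '<!doctype html><html><head><link rel="stylesheet" href="/app.css"></head><body>';
  yield "<h1>Products</h1>";

  // Only this part of the page waits for the data.
  const products = await productsPromise;
  yield `<ul>${products.map((p) => `<li>${p}</li>`).join("")}</ul>`;
  yield "</body></html>";
}

// Collect the chunks in arrival order. A real server would write each chunk
// to the response as soon as it is produced instead of buffering them.
async function collectChunks() {
  const chunks = [];
  for await (const chunk of renderPage()) chunks.push(chunk);
  return chunks;
}
```

In Node, something along the lines of `Readable.from(renderPage()).pipe(res)` could send these chunks over an HTTP response as they are produced. Real frameworks add a lot on top, such as out-of-order streaming and error handling.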

Recap

We covered a lot of ground, and we barely scratched the surface.

There’s no one universal answer to what is the best framework, architecture or pattern mentioned in this post and the countless others we didn’t mention.

It’s always a tradeoff against specific metrics. Knowing which tradeoffs to make will depend on what you are building, who your users are, and their usage patterns, as well as whatever other requirements around key user experiences (like performance budgets) are set.

For most of us, the truth will be somewhere in the middle. The great thing about the new wave of frameworks and innovation is that they provide levers to scale up and down as needed.

For those coming into the industry and those experienced alike, it’s always a good bet to invest in the fundamentals.

The evolution of frameworks slowly pushes the native web further, removing the previous need for frameworks to smooth its rough edges and mitigating previous tradeoffs, allowing us to adopt its features natively more and more.

References and resources