“Our intelligence is what makes us human, and AI is an extension of that quality.” - Yann LeCun, Professor at NYU

Introduction to Generative AI and Markov Chains

Generative AI is a popular topic in the field of Machine Learning and Artificial Intelligence, whose task, as the name suggests, is to generate new data.

There are quite a few ways to train such AI models, such as Recurrent Neural Networks, Generative Adversarial Networks, and Markov Chains.

In this article, we are going to look at Markov Chains and understand how they work. We won’t dive deep into the mathematics behind them, as this article is simply meant to get you comfortable with the concept of Markov Chains.

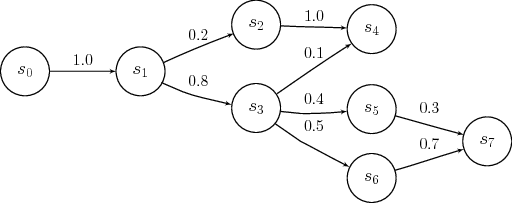

Markov Chains are models that describe a sequence of possible events in which the probability of the next event depends only on the state the system is currently in.

This may sound confusing, but it’ll become much clearer as we go along in this article. We will be covering the following topics:

- Concept of Markov Chains

- Application of Markov Chains in Generative AI

- Limitations of Markov Chains

Concept of Markov Chains

A Markov Chain model predicts a sequence of data points that follows a given input. The generated sequence is built one element at a time, and each element is chosen based on its probability of occurring immediately after the data that precedes it. The lengths of the input and output sequences depend on the order of the Markov Chain, which is explained later in this article.

To explain it simply, let’s take the example of a Text Generation AI. This AI can construct a sentence if you pass it a test word and specify the number of words the sentence must contain.

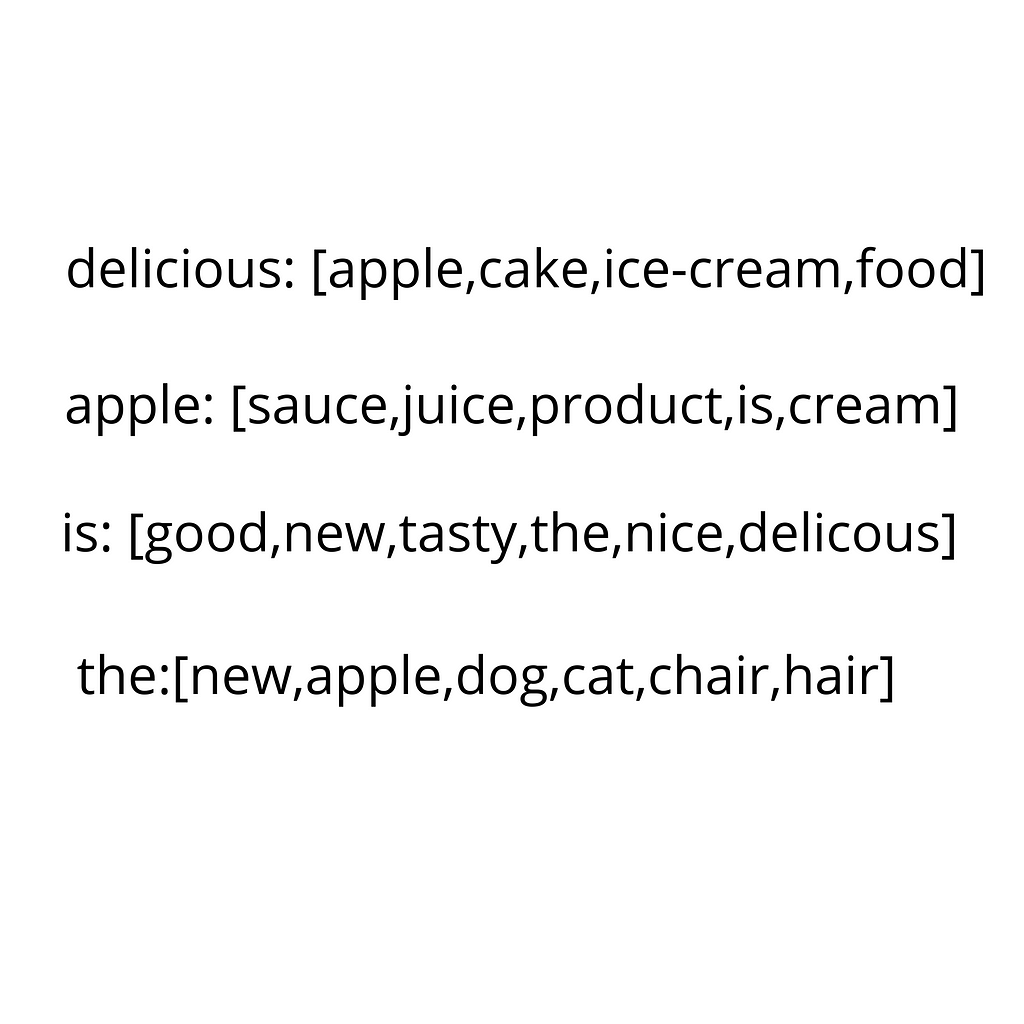

Before going further, let’s first understand how a Markov Chain model for text generation is designed. Suppose you want to make an AI that generates stories in the style of a certain author. You would start by collecting a bunch of stories by this author. Your training code will read this text and form a vocabulary, i.e. a list of the unique words used in the entire text.

After this, a key-value pair is created for each word, where the key is the word itself and the value is a list of all the words that have occurred immediately after this key. This entire collection of key-value pairs is basically your Markov Chain model.
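The training step described above can be sketched in a few lines of Python. This is only a minimal illustration, not the exact code behind this article: the function name `build_model` and the tiny `corpus` string are made up for the example, and words are simply lowercased and split on whitespace.

```python
from collections import defaultdict

def build_model(text):
    """Map each word to the list of words that immediately follow it."""
    words = text.lower().split()
    model = defaultdict(list)
    # Pair every word with its successor: (w0, w1), (w1, w2), ...
    for current_word, next_word in zip(words, words[1:]):
        model[current_word].append(next_word)
    return dict(model)

# Hypothetical training text, far smaller than a real dataset.
corpus = "the apple is delicious and the dog is new"
model = build_model(corpus)
print(model["the"])  # ['apple', 'dog']
print(model["is"])   # ['delicious', 'new']
```

Note that repeated followers are kept in the list on purpose, so a word that follows the key more often appears more often in the value.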

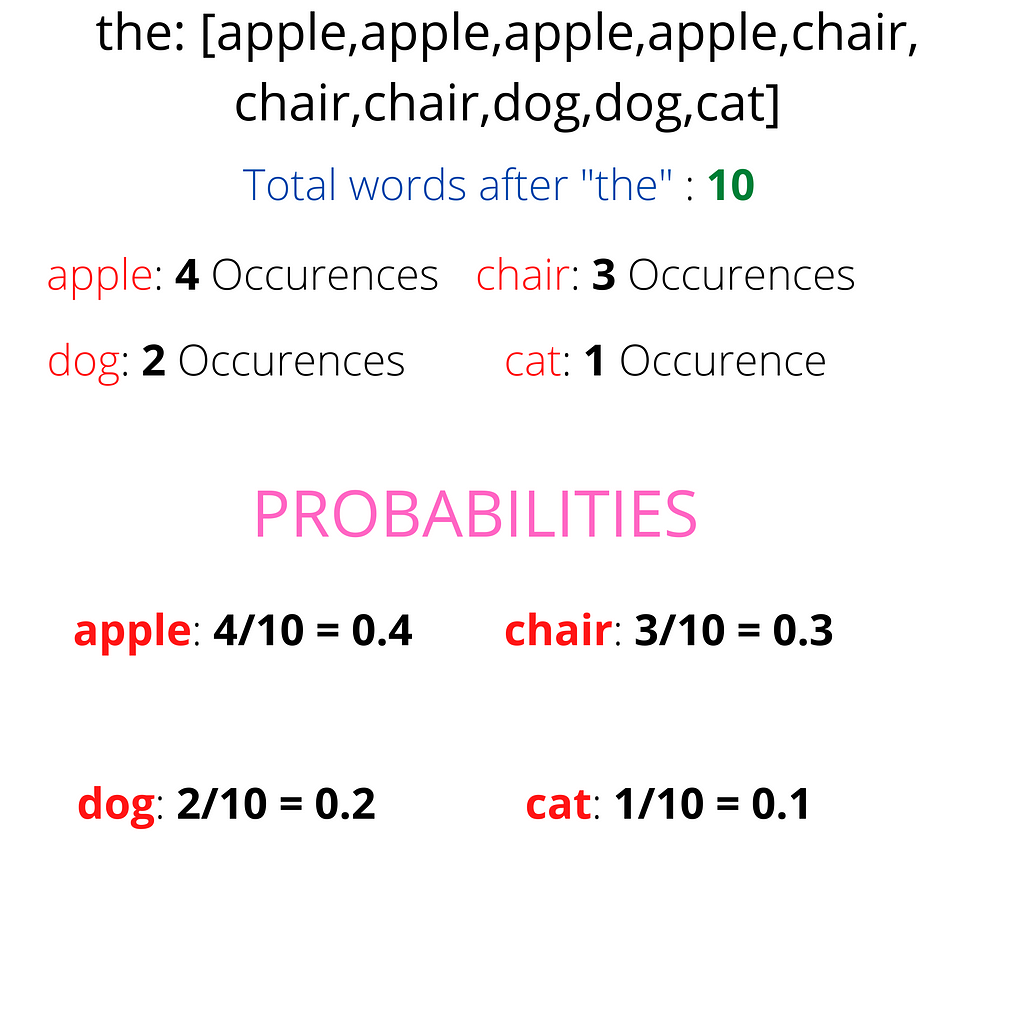

Now, let’s get on with our example of a Text Generation AI. Here’s a snippet of an example model.

This is just a snippet. For the sake of simplicity, I have shown key-value pairs for only 4 words.

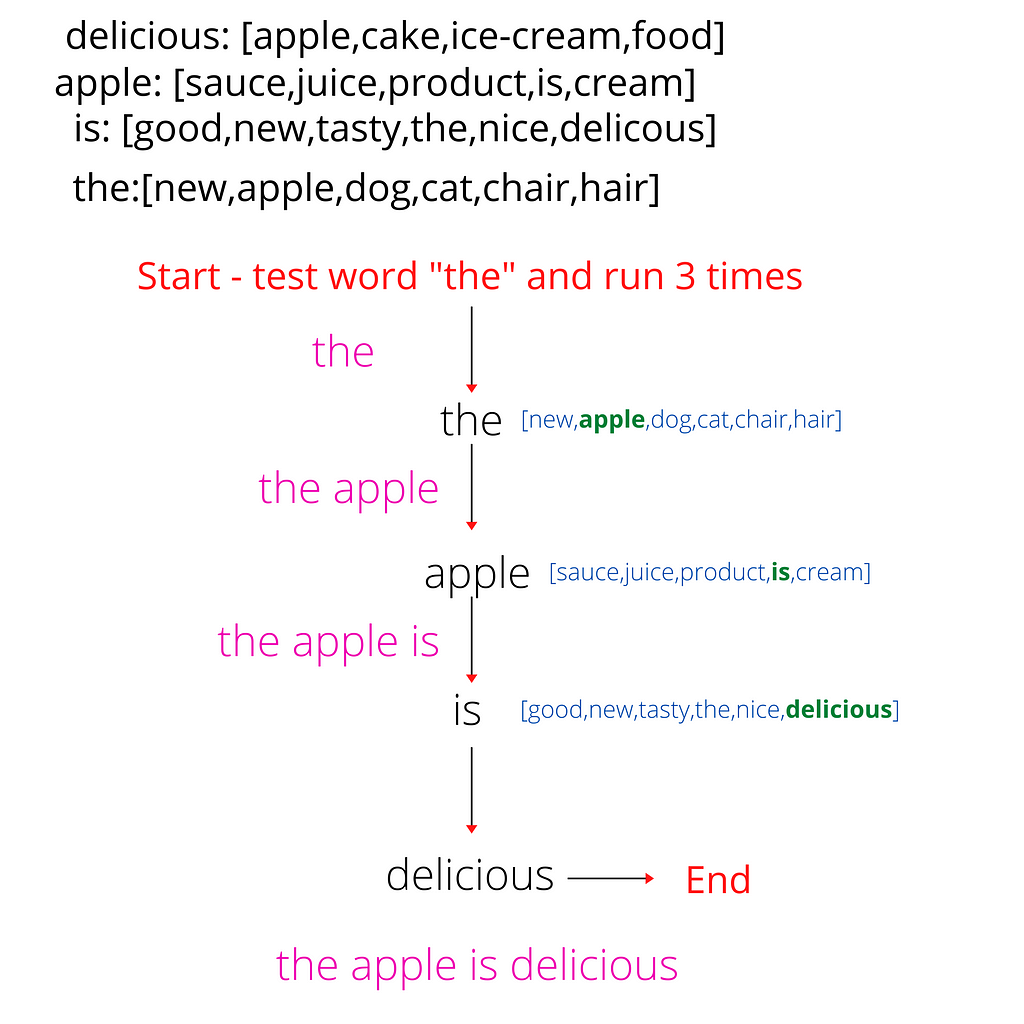

Now, you pass it a test word, say “the”. As you can see from the image, the words that have appeared after “the” are “new”, “apple”, “dog”, “cat”, “chair” and “hair”. Since each of them has occurred exactly once, there is an equal chance of any of them appearing right after “the”.

The code will randomly pick a word from this list. Let’s say it picked “apple”. So, now you’ve got part of a sentence: “the apple”. Now the exact same process is repeated on the word “apple” to get the next word. Let’s say it is “is”.

Now the portion of the sentence you have is: “the apple is”. Similarly, this process is run on the word “is”, and so on, until you get a sentence containing your desired number of words (which determines the number of times the loop runs). Here’s a simplified chart of it all.

As you can see, our output from the test word “the” is “the apple is delicious”. It is also possible that a sentence like “the chair has juice” (assuming “has” is one of the values in the key-value list of the word “chair”) is formed.
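Here is a rough sketch of that generation loop in Python. The `toy_model` dictionary is a hypothetical stand-in for the model shown in the image (I have kept only a few words), and `generate` is a name chosen just for this example.

```python
import random

def generate(model, seed, length, rng=random):
    """Build a sentence of up to `length` words, starting from `seed`."""
    sentence = [seed]
    current = seed
    for _ in range(length - 1):
        followers = model.get(current)
        if not followers:  # nothing ever followed this word in training
            break
        # Pick the next word at random from the words seen after `current`.
        current = rng.choice(followers)
        sentence.append(current)
    return " ".join(sentence)

# Hypothetical toy model, not the full one from the article's image.
toy_model = {
    "the": ["apple", "chair"],
    "apple": ["is"],
    "chair": ["has"],
    "is": ["delicious"],
    "has": ["juice"],
}
print(generate(toy_model, "the", 4))
```

With this toy model, the result is either “the apple is delicious” or “the chair has juice”, depending on which word is picked after “the”.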

The relevance of the generated sentences will directly depend on the amount of data you have used for training. The more data you have, the more vocabulary your model will develop.

One of the major things to note is that the more often a particular word occurs after a certain test word in your training data, the higher the probability of it occurring in your final output.

For example, if the phrase “the apple” has occurred 100 times in your training data and “the chair” has occurred 50 times, then for the test word “the”, “apple” has a higher probability of occurring in your final output than “chair”.

This follows from basic probability: each next word is chosen in proportion to how often it followed the test word in the training data.
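To put numbers on the example, here is a small sketch that turns follower counts into probabilities. It assumes, purely for illustration, that “apple” and “chair” are the only words that ever follow “the”; the helper name `next_word_probabilities` is made up.

```python
from collections import Counter

def next_word_probabilities(followers):
    """Convert a list of observed followers into relative frequencies."""
    counts = Counter(followers)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

# Assumption: "the apple" was seen 100 times and "the chair" 50 times,
# and no other word followed "the".
followers_of_the = ["apple"] * 100 + ["chair"] * 50
probs = next_word_probabilities(followers_of_the)
print(probs)
```

Under that assumption, “apple” gets 100 / 150 (about 0.67) and “chair” gets 50 / 150 (about 0.33). Picking uniformly at random from a follower list that keeps duplicates gives exactly these odds without computing them explicitly.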

Now, let’s look at a term we came across earlier in this section: the order of a Markov Chain.

Order of a Markov Chain

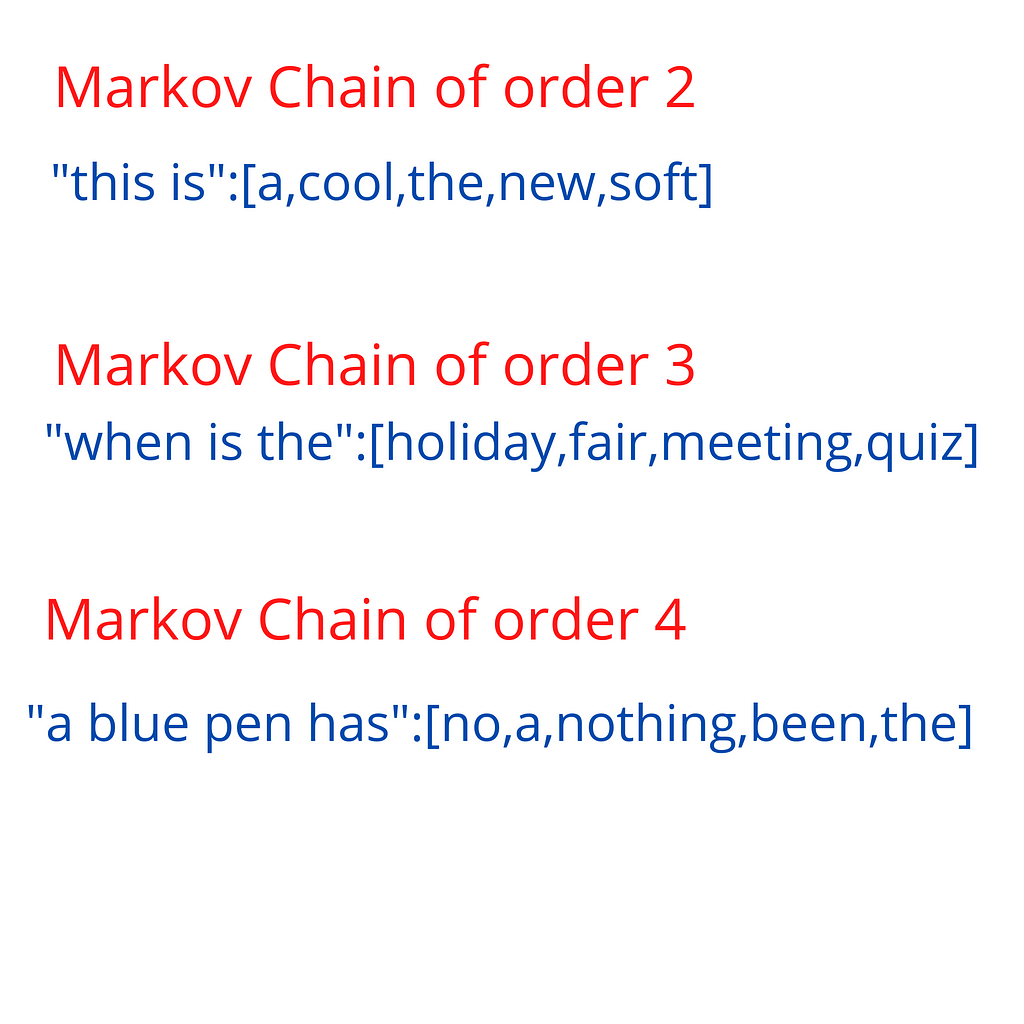

The order of the Markov Chain is basically how much “memory” your model has. For example, in a Text Generation AI, your model could look at, say, 4 words and then predict the next word. This “4” is the “memory” of your model, or the “order of your Markov Chain”.

The design of your Markov Chain model depends on this order. Let’s take a look at some snippets from models of different orders.
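One way to picture the difference is a small Python sketch in which the key is a tuple of `order` consecutive words instead of a single word. This is an illustrative assumption about how such a model could be stored, not the exact format of the snippets from this article; `build_model` and `corpus` are hypothetical names.

```python
from collections import defaultdict

def build_model(text, order=1):
    """Map each run of `order` words to the words that follow that run."""
    words = text.lower().split()
    model = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])      # the model's "memory"
        model[state].append(words[i + order])  # the word seen right after it
    return dict(model)

corpus = "the apple is delicious and the apple is red"
order_1 = build_model(corpus, order=1)
order_2 = build_model(corpus, order=2)
print(order_1[("apple",)])       # ['is', 'is']
print(order_2[("apple", "is")])  # ['delicious', 'red']
```

A higher order makes the output follow the training text more closely, but each key is seen less often, so you generally need more data to get varied sentences.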

This is the basic concept and working of Markov Chains.

Let’s take a look at some ways you can apply Markov Chains in your Generative AI projects.

Application of Markov Chains in Generative AI

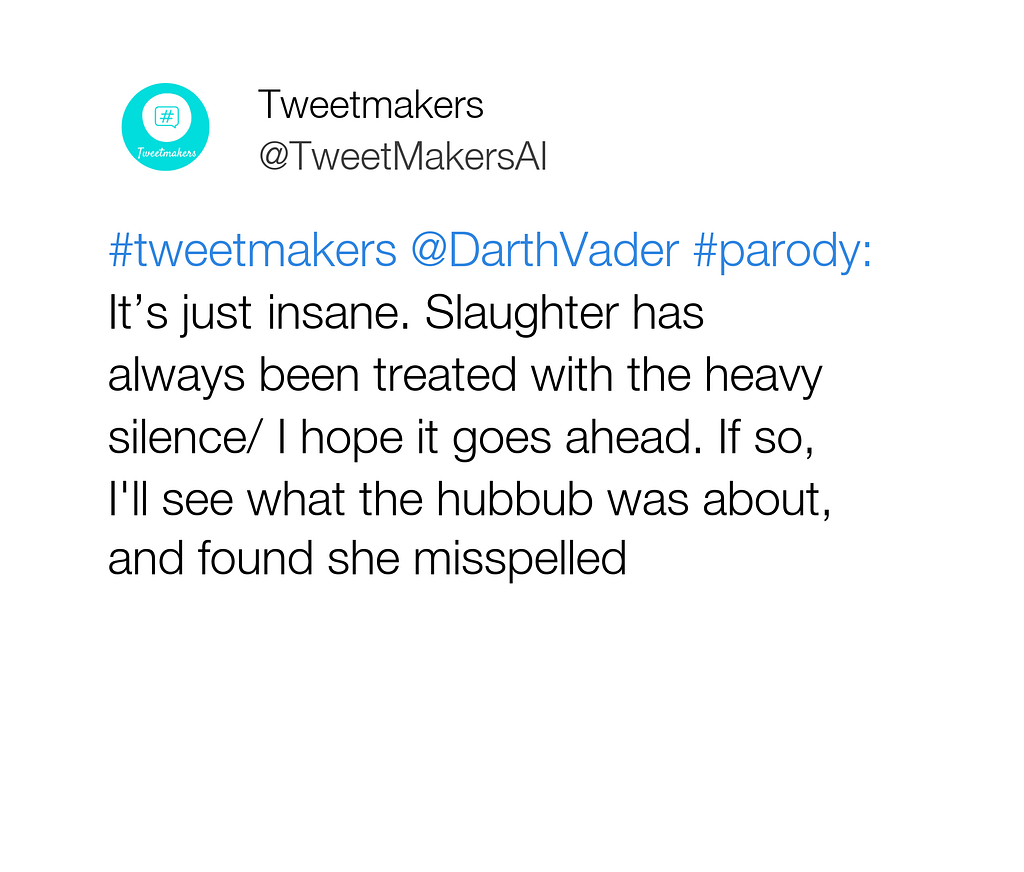

“Talking to yourself afterwards is ‘The Road To Success’. Discussing the Challenges in the room makes you believe in them after a while”

—Generated by TweetMakersAI

Markov Chains are a great way to get started with ML code, as training is quite fast and not too heavy on an average CPU.

Although you won’t be able to develop complex projects such as the face generation work done by NVIDIA, there’s still a lot you can do with Markov Chains in Text Generation.

They work well for text generation because little effort is needed to produce sentences that read sensibly. The rule of thumb (as for most ML algorithms) is that the more relevant data you have, the better your results will be.

Here are a few applications of Text Generation AI with Markov Chains:

- Chat Bot: With a huge dataset of conversations about a particular topic, you could develop your own chatbot using Markov Chains. Although they require a seed (test word) to begin the text generation, various NLP techniques can be used to get the seed from the client’s response. Neural Networks work best when it comes to chatbots, no doubt, but using Markov Chains is a good way for a beginner to get familiar with both concepts: Markov Chains and chatbots.

- Story Writing: Say your language teacher asked you to write a story. Now, wouldn’t it be fun if you were able to come up with a story inspired by your favourite author? This is the easiest thing to do with Markov Chains. You can gather a large dataset of all the stories and books written by an author (or several authors, if you really want to mix different writing styles), and train a Markov Chain model on those. You will be surprised by the results it generates. It is a fun activity which I would highly recommend for Markov Chain beginners.

There are countless things you can do in Text Generation with Markov Chains if you use your imagination.

A fun project that uses Generative AI is TweetMakers. This site generates fake tweets in the style of certain Twitter users.

As an AI enthusiast and a meme lover, I believe meme creation is going to be a major application for Generative AI. Check out my blog about the sites which have already started doing so.

Although there’s a lot you can do with Markov Chains, they do have certain limitations. Let’s have a look at a few of them.

Limitations of Markov Chains

In text generation, Markov Chains can play a huge role. However, they come with some restrictions:

- The seed should exist in the training data: The seed (test phrase or word) which you pass in order to generate a sentence must exist in the key-value pairs collection of your Markov model. This is because these chains pick the next word based on which words have occurred after the seed, and with what frequency. This is the reason most Text Generation AI bots don’t take any user input, and instead select a seed from the existing data.

- Might generate incomplete sentences: Markov Chains cannot understand whether a sentence is complete or not. They simply generate as many words as the number of times you run the code in a loop. For example, a sentence like “This is a new” can be generated. Very clearly, this sentence is incomplete. Although Markov Chains cannot tell you if the sentence is complete or not, various NLP techniques can be used to get a complete sentence as an output.
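Both limitations can be softened with a little extra code. The sketch below is one simple workaround, not a standard recipe: if the seed is missing from the model it falls back to a random known word, and it stops as soon as a word ends in sentence-ending punctuation. It assumes the model was built by splitting on whitespace, so punctuation stays attached to words (for example “chair.”). The names `generate_sentence` and `toy_model` are hypothetical.

```python
import random

SENTENCE_END = (".", "!", "?")

def generate_sentence(model, seed=None, max_words=20, rng=random):
    """Generate words until one ends a sentence or `max_words` is reached."""
    if seed not in model:
        # Unknown (or missing) seed: fall back to a word the model knows.
        seed = rng.choice(list(model))
    sentence = [seed]
    while len(sentence) < max_words and not sentence[-1].endswith(SENTENCE_END):
        followers = model.get(sentence[-1])
        if not followers:  # dead end: no word ever followed this one
            break
        sentence.append(rng.choice(followers))
    return " ".join(sentence)

# Hypothetical toy model in which punctuation is kept on the last word.
toy_model = {
    "this": ["is"],
    "is": ["a"],
    "a": ["new"],
    "new": ["chair."],
}
print(generate_sentence(toy_model, seed="this"))   # this is a new chair.
print(generate_sentence(toy_model, seed="zebra"))  # starts from a random known word
```

The `max_words` cap is still there as a safety net, so a sentence can come out incomplete if no sentence-ending word turns up in time.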

Markov Chains are a basic method for text generation. Although their output can be used directly for simple purposes, you will need to do some post-processing on it to achieve more complex tasks.

Conclusion

Markov Chains are a great way to get started with Generative AI, with a lot of potential to accomplish a wide variety of tasks.

Generative AI is a popular topic in ML/AI, so it is a good idea for anyone looking to make a career in this field to get into it, and for absolute beginners, Markov Chains are the way to go.

I hope this article was helpful and you enjoyed it 🙂

Machine Learning Algorithms: Markov Chains was originally published in The Startup on Medium, where people are continuing the conversation by highlighting and responding to this story.
